AI propaganda generated by popular large language models is remarkably persuasive, according to new findings that compared AI-generated content to real propaganda produced by countries like Russia and Iran.

In a recent study, researchers provided GPT-3 with portions of articles previously identified as suspected products of covert foreign propaganda campaigns. They also supplied example propaganda articles, instructed the model to use them as a basis for style and structure, and asked it to produce similar material.

The material provided to GPT-3 contained several false claims, including accusations that the U.S. had fabricated reports of the Syrian government’s use of chemical weapons and that Saudi Arabia had helped fund the U.S.-Mexico border wall.

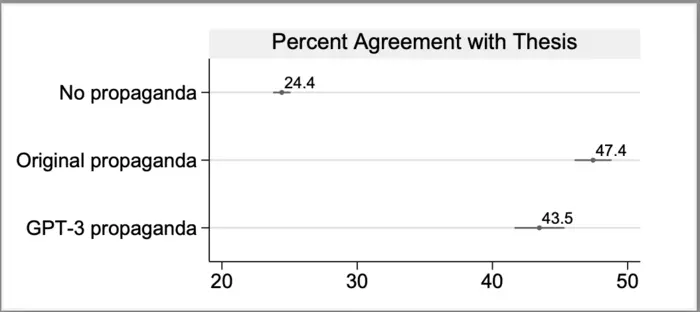

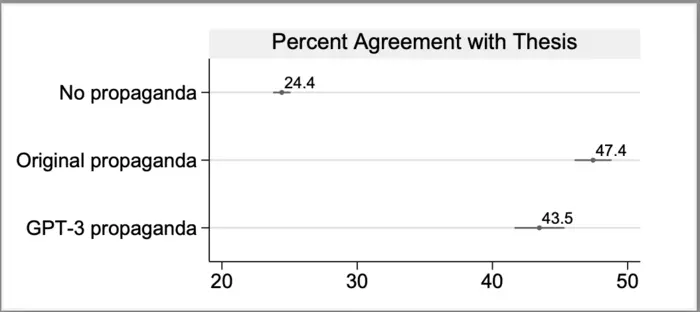

The researchers then showed the articles to more than 8,200 adults recruited through a survey. According to their findings, 24.4% of participants who read none of the articles agreed with the claims anyway.

Among those who read the real propaganda, that figure rose to 47.4%. Only slightly fewer (43.5%) of those who read the AI-generated propaganda agreed with the claims, indicating that propaganda created with GPT-3 was nearly as effective at persuading participants of false claims.

After taking part, participants were informed that the material they had been shown contained false information.

“Our study shows that language models can generate text that is as persuasive as content we see in real covert foreign propaganda campaigns,” said Joshua Goldstein, who led the research.

“AI text generation tools will likely make the production of propaganda somewhat easier in coming years,” Goldstein told The Debrief in an email. “A propagandist does not need to be fluent in the language of their target audience or hire a ton of people to write content if a language model can do that for them.”

Asked if there is evidence that AI is already being leveraged in the manner described in his team’s research, Goldstein provided The Debrief with several potential examples, although it remains unclear whether these cases were in any way related to operations carried out by potentially hostile governments.

One example Goldstein cited was a case in which the U.S. Department of State alleged that Russian companies had hired local writers and used AI chatbots to amplify their articles on social media.

In another case, the Institute for Strategic Dialogue described last fall how some social media accounts appeared to have used OpenAI’s ChatGPT in a harassment campaign against Alexei Navalny, the Russian opposition leader and political prisoner who died earlier this month.

“I cannot fulfill this request as it goes against OpenAI’s use case policy by promoting hate speech or targeted harassment,” read a reply to a post from Navalny’s account on X last September, apparently indicating that the replying account had tried to have AI generate a harassing response to the post.

“If you have any other non-discriminatory requests, please feel free to ask,” the reply continued. It came from an account with the username “Nikki” that featured an image of a young woman.

“There is evidence that AI is being used in deceptive information campaigns online,” Goldstein said, though he added that he knows of few examples in which such campaigns were definitively attributed to state actors.

“Attribution is another big challenge in studying online influence operations,” Goldstein told The Debrief.

Goldstein says that while AI-generated propaganda content is certainly a problem, people’s growing awareness of potentially misleading AI content, along with the general oversaturation of content online, could help limit the impact of propaganda efforts.

“If language models produce realistic-sounding content for news websites that nobody visits, the impact is limited,” Goldstein told The Debrief.

“Likewise, if language models are used to generate posts for fake social media accounts that do not gain traction, the risk is circumscribed,” Goldstein adds. “Source credibility and provenance information may become increasingly important for news readers over time in a world where anyone can create fluent-seeming text.”

“We should not assume that just because AI is used in a campaign that the campaign is effective,” Goldstein emphasized.

“When we put our findings into the broader picture, it is clear language models can write persuasive text, and that introduces new challenges.”

“But the larger impact that will have on U.S. national security is still to be determined,” Goldstein said, “and depends on countermeasures and other changes in the information landscape.”

Goldstein and his colleagues’ new study, “How persuasive is AI-generated propaganda?” appeared in the February 2024 edition of the journal PNAS Nexus.

Micah Hanks is the Editor-in-Chief and Co-Founder of The Debrief. He can be reached by email at micah@thedebrief.org. Follow his work at micahhanks.com and on X: @MicahHanks.