Welcome to this week’s installment of The Intelligence Brief… in recent days, the “Godfather of artificial intelligence” has returned to offer more words of caution about the potential issues humankind could face in the years ahead with AI. In our analysis this week, we’ll look at some of Professor Geoffrey Hinton’s most recent statements, which include 1) why AI still can’t match us, but is getting close, 2) the one thing Hinton says he doesn’t understand that AI is seemingly able to do, and which varieties can do it, 3) the dangers of military use of AI, and 4) the economic impacts AI could have on humans.

Quote of the Week

“By far the greatest danger of Artificial Intelligence is that people conclude too early that they understand it.”

Latest Stories: Before getting into our analysis this week, a few of the stories we’re covering at The Debrief include how DARPA has announced it’s moving forward with developing a novel VTOL naval support drone codenamed ANCILLARY. Elsewhere, what is The Pancosmorio Theory? You’ll have to read and find out… and as always, you can get links to all our latest stories at the end of this week’s newsletter.

Podcasts: This week in podcasts from The Debrief, in the latest episode of The Debrief Weekly Report, Stephanie Gerk and MJ Banias groove to amino acid slow jams as they discuss lunar mysteries and ultrafast white dwarfs. Meanwhile, this week on The Micah Hanks Program, career R&D chemist Robert Powell of the Scientific Coalition for UAP Studies joins us to discuss large UAP detected on radar. You can subscribe to all of The Debrief’s podcasts, including audio editions of Rebelliously Curious, by heading over to our Podcasts Page.

Video News: Premiering this Friday on Rebelliously Curious, Chrissy Newton speaks with Richard Mansell, Chief Executive Officer and Co-founder of IVO Ltd, as they discuss how the organization is working on a new all-electric thruster called The IVO Quantum Drive that draws limitless power from the Sun. Also, check out the latest episode of Ask Dr. Chance, where Chance has a conversation with Tim Russ from Star Trek. Be sure to watch these videos and other great content from The Debrief on our official YouTube Channel.

With all that behind us, it’s time to examine the most recent cautionary words from a former innovator in the field of AI who now warns about the technology in his latest public statements.

Godfather of AI Raises New Concerns About Machine Intelligence

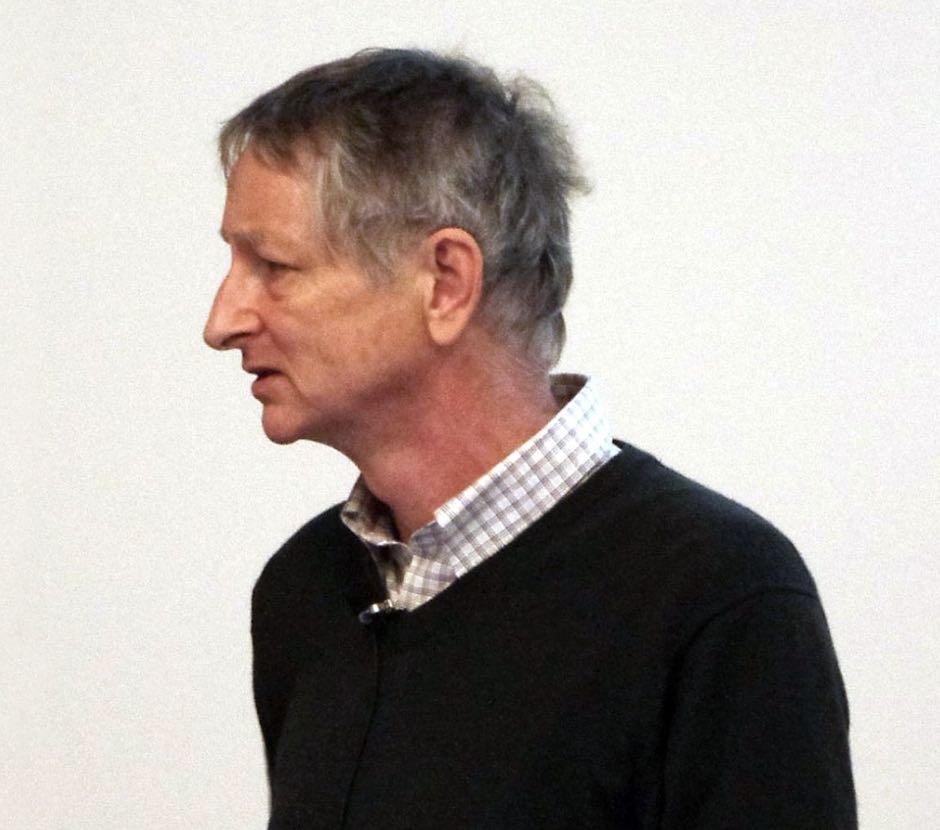

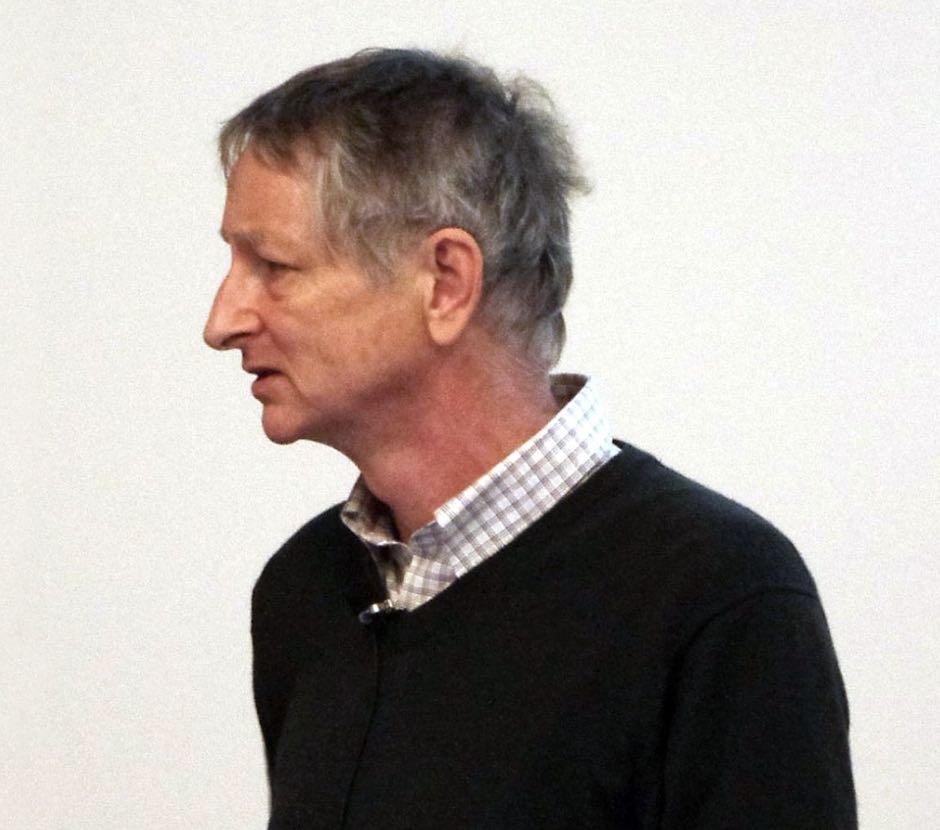

Computer scientist and cognitive psychologist Geoffrey Hinton, known by many as the “Godfather of artificial intelligence,” has returned with more warnings about the potential perils of artificial intelligence (AI), a burgeoning area of technology he had a direct hand in helping develop.

Speaking at the recent three-day Collision technology conference in Toronto, the mild-mannered British-Canadian scientist warned that although machine intelligence is not yet quite on par with human intelligence, the rate at which AI is advancing and becoming increasingly capable of mimicking humans is alarming.

“They still can’t match us, but they’re getting close,” Hinton said during the event.

So what else has AI’s godfather, now one of the most vocal critics warning about the technology’s potential misuse, recently said about why we should be concerned about artificial intelligence?

What the Godfather of AI Says He Doesn’t Understand About AI

“It’s the big language models that are getting close,” Hinton said during the Collision event when asked which forms of artificial intelligence are progressing the most in terms of matching human intelligence.

Hinton, who several months ago left his position at Google in order to free himself to speak more publicly about potential dangers related to AI, made headlines for his grave outlook on the future of the technology if left unchecked.

However, during the recent Collision event, Hinton admitted something startling about the language models that are currently the closest to matching the capabilities of humans.

“I don’t really understand why they can do it, but they can do little bits of reasoning,” Hinton said.

Stop and ponder Hinton’s words for a moment: one of the chief innovators in the field of artificial intelligence admits that he doesn’t “really understand why” some large language models are capable of “little bits of reasoning” that appear to be comparable to human logic and reasoning.

Humans Are Machines, Too

“We’re just a machine,” Hinton said during the recent event. “We’re a wonderful, incredibly complicated machine, but we’re just a big neural net.”

In essence, AI may be capable of doing seemingly extraordinary things because it increasingly functions in the same ways that humans do.

“And there’s no reason why an artificial neural net shouldn’t be able to do everything we can do,” Hinton added.

“But I think we have to take seriously the possibility that if they get to be smarter than us, which seems quite likely, and they have goals of their own, which seems quite likely, they may well develop the goal of taking control.”

History shows that the use of power and great technological capabilities has generally resulted in circumstances that benefit only a few, while being less than beneficial to others. If human behavior is any indication of what kinds of problems might arise from machine intelligence in the future, it is not unreasonable to assume that AI may follow suit, although gauging the potential motivations of such an AI is more difficult.

Regardless of the circumstances, and whether or not it’s even intended by any prospective AI, “if they do that, we’re in trouble,” Hinton warned.

Machine Wars With Battle Bots

Although in most cases we have no way of knowing whether AI may develop intentions that could negatively impact humans, there are a few exceptions. One, according to Hinton, involves the deliberate use of AI by militaries in warfighting systems.

“If defense departments use [AI] for making battle robots, it’s going to be very nasty, scary stuff,” Hinton said, emphasizing that warfighting capabilities driven by AI could prove to be disastrous “even if it’s not super intelligent, and even if it doesn’t have its own intentions.”

One example Hinton gives is the destruction that could result from a form of AI that does “just what Putin tells it to.”

“It’s gonna make it much easier, for example, for rich countries to invade poor countries.

“At present, there’s a barrier to invading poor countries willy nilly,” Hinton said, “which is you get dead citizens coming home. If they’re just dead battle robots, that’s just great, the military-industrial complex would love that.”

They’re Gonna Take Our Jobs

Even beyond military concerns, Hinton warns of the economic problems AI could cause, since it seems likely that AI will fill roles in areas of industry where less skilled workers generally seek employment.

“The jobs that are gonna survive AI for a long time are jobs where you have to be very adaptable and physically skilled,” Hinton said. “And plumbing is that kind of a job.”

While Hinton continues to raise concerns about where the development of AI might lead, he also says there is potential for good.

“I think progress in AI is inevitable, and it’s probably good, but we seriously need to worry about mitigating all the bad sides of it and worry about the existential threat,” Hinton said.

That concludes this week’s installment of The Intelligence Brief. You can read past editions of The Intelligence Brief at our website, or if you found this installment online, don’t forget to subscribe and get future email editions from us here. Also, if you have a tip or other information you’d like to send along directly to me, you can email me at micah [@] thedebrief [dot] org, or Tweet at me @MicahHanks.

Here are the top stories we’re covering right now…

- Mysterious Force Behind Cosmic Acceleration To Be Explored by New Joint NASA and ESA Effort

NASA and the ESA will team up to unravel the mysteries of dark energy and cosmic acceleration with the Roman and Euclid space telescopes.

- Diary of an Interstellar Voyage: Parts 31-33 (June 25-26 2023)

Might an iron peanut be worth ten thousand spherules, or nothing at all? Avi Loeb and the Galileo Project team’s expedition to the Pacific waters off Papua New Guinea draws to a close.

- Webb Space Telescope Makes Breakthrough Detection of Compound “Crucial” to Formation of Life

A new carbon compound has been detected in space for the first time using NASA’s James Webb Space Telescope, according to an international team of researchers.

- Diary of an Interstellar Voyage: Parts 28-30 (June 25-26 2023)

As the Galileo Project team’s expedition winds down, Avi Loeb provides a series of updates on the search for spherules believed to be associated with an interstellar meteor.

- 25-Year Academic Wager Settled as the Mystery of Consciousness Remains Elusive

A 25-year wager between a renowned neuroscientist and philosopher has finally come to a conclusion with consciousness remaining a mystery.

- Revolutionary VTOL Drone for Naval Support: DARPA Unleashes ‘ANCILLARY’ Into the Future

DARPA announces it’s moving forward with developing a novel VTOL naval support drone codenamed ANCILLARY.

- The Pancosmorio Theory: New Research Reveals Factors That Could Complicate Long-term Survival in Space

Long-term survival of human explorers in deep space may be harder to achieve than once thought, according to new research.

- Diary of an Interstellar Voyage: Parts 25-27 (June 24-25 2023) Somewhere Over the Rainbow

Avi Loeb presents a new series of updates on the search for spherules believed to be associated with an interstellar meteor retrieved during the Galileo Project team’s expedition.

- Amino Acid Slow Jams This week on The Debrief Weekly Report…

On today’s episode, we discuss a lunar mystery, a discovery of an ultrafast white dwarf star yeeting across the Milky Way, and the discovery of life-giving amino acids hanging out in a gaseous cloud in deep space. You can listen to the episode on Apple Podcasts, Spotify, by tasking a satellite, or simply download it wherever you get your podcasts. Please make sure to click ‘Subscribe’ and Rate and Review the podcast. Every Friday, join hosts MJ Banias and […]

- Diary of an Interstellar Voyage: Parts 21-23 (June 22-23, 2023) The Hunt for More IM1 Spherules

The Galileo Project team continues its search for evidence of an interstellar object in this installment of “Diary of an Interstellar Voyage.”

- Time Dilation Experiments Could Upend Einstein, Explain Dark Matter and Expanding Universe

New research looking into the effects of time dilation may help researchers unlock the mysterious nature of dark matter and the accelerated expansion of the universe by supporting or disproving the theories of Albert Einstein.

- Study Reveals People Overestimate Their Ability to Detect BS

New research reveals the difficulties in detecting misinformation in what researchers affectionately call the “bullshit blind spot.”

- Space Thruster Tiles, Self-Healing Squid-like Stuff, and Pig Hearts: DoD Event Exhibits Pentagon’s Unconventional Tech

The Pentagon recently showcased some of its most unconventional innovations during an event hosted by the DoD’s ManTech program.

- Wireless Technology Company Seeks to Bridge the Digital Divide

Chrissy Newton is joined by Thomas Olson, Director of Business Development for Avealto, to discuss how his team is playing a crucial role in bringing wireless technology to the masses.

- The Nuclear Connection: UAP and the Atomic Warfare Complex

Throughout the early years of the Cold War, recurrent appearances of unidentified aerial phenomena (UAP) were documented near several radioactive materials production plants, atomic weapons assembly facilities, and atomic weapons stockpile sites in the United States.

- The Search for Titan: Knocking Sounds, a Debris Field, and Breaking Developments in the Rescue Effort

Welcome to this week’s installment of The Intelligence Brief… as the search for a missing submersible has captured the world’s attention, this week we’ll be analyzing the rescue effort and examining 1) what we currently know about the search for Titan and 2) the company behind the missing vessel, as well as 3) what U.S. Coast Guard and those assisting it have found, and 4) potentially breaking developments in the Rescue Effort. Quote of the Week: “Man has only to […]

- Discovery of “Debris Field” Announced by U.S. Coast Guard in Search for Missing Submersible

A debris field has reportedly been discovered by searchers involved in the effort to recover a missing submersible that was lost on Sunday near the vicinity of the Titanic wreckage.

- Anomalous Phenomenon Observed in Quantum Bunching Effect “Utterly Contradicts Common Knowledge”

An anomalous bunching effect appears to contradict our accepted understanding of the properties of photons, according to a new paper.

- MQ-9 Reaper Completes Harrowing Mission First That Proves It Can Be Flown From Anywhere in the World

The USAF says it has successfully landed an MQ-9 in terrain that proves the UAV can be launched from virtually anywhere in the world.