A novel exosuit design uses artificial intelligence to decode brain signals from people who have spinal cord injuries or have suffered strokes, aiming to redefine the landscape of neuro-rehabilitation and assistive devices.

Called the Wyss Synapsuit, it is positioned as a wearable technology that offers new hope for restoring functional mobility and improving quality of life for people with severe upper-limb motor disabilities.

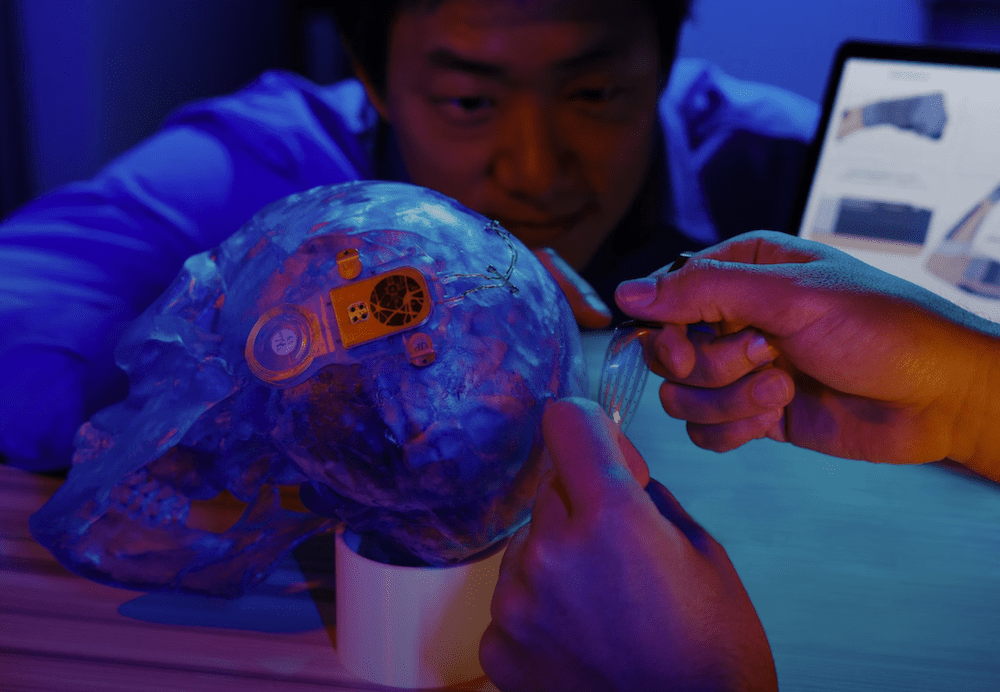

The Synapsuit’s science fiction-like heart is the Neuro-AI decoder, a sophisticated AI algorithm developed by researchers at the Wyss Center. This advanced deep-learning method is designed to interpret complex brain signals in real time, even when signals are sporadically missing, a common challenge in wireless brain-computer interfaces (BCIs).

The decoder leverages machine learning techniques and signal processing methods to extract meaningful information from brain signals.
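To make the "sporadically missing" problem concrete, here is a minimal sketch of one common way to cope with dropped samples in a wireless stream: hold the last valid value and pass along a mask marking which samples were real. This is an illustration only, not the Wyss Center's method. The function name `fill_missing`, the use of NaN to mark a dropped sample, and the default of 0.0 before any valid sample arrives are all assumptions.

```python
import math
from typing import List, Tuple


def fill_missing(samples: List[float]) -> Tuple[List[float], List[bool]]:
    """Hold the last valid value over dropped samples (marked as NaN).

    Returns the filled signal plus a validity mask, so a downstream
    decoder could learn to trust filled-in samples less.
    """
    filled: List[float] = []
    valid: List[bool] = []
    last = 0.0  # assumed placeholder until the first valid sample arrives
    for sample in samples:
        ok = not math.isnan(sample)
        if ok:
            last = sample
        filled.append(last)
        valid.append(ok)
    return filled, valid


nan = float("nan")
signal, mask = fill_missing([1.0, nan, nan, 2.5, nan])
print(signal)  # filled signal
print(mask)    # which samples were actually received
```

A learned decoder would likely handle gaps in a more sophisticated way. The sketch only shows why a mask is useful: it lets whatever comes next tell real measurements from filled-in ones.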

The Synapsuit project is a collaborative effort involving local and international partners, including the Korea Electronics Technology Institute, Neurosoft Bioelectronics, and notable professors from the Swiss Federal Institute of Technology in Lausanne and Lausanne University Hospital.

Exosuits, AI, and the Future of Medical Care

“Neuroscience is rapidly merging with AI, allowing us to discover important patterns hidden inside seemingly chaotic brain signals,” explained Dr. Kyuhwa Lee, a principal investigator on the project with the Wyss Center. “Using cutting-edge machine learning approaches, we aim to translate movement intentions into action for people living with movement disorders following spinal cord injury and stroke.”

The Neuro-AI decoder operates by recording brain signals using soft, foldable, and flexible electrodes that conform to neural tissues. Smaller than 1 mm, these electrodes rest on the brain and feed electrical signals into the decoder, which interprets the user’s intentions to move their arm or hand. The decoded signals are then converted into commands for the Synapsuit, a fully flexible, soft exosuit. The exosuit, in turn, uses electrical current through transcutaneous neurostimulation to control the muscles responsible for arm and hand movements.

It’s this feedback loop that makes the suit unique. Unlike other AI- and BCI-powered robotic limbs, the Synapsuit sends signals to the user’s own muscles, making them move and engage. The team also integrated an electrostatic clutch (ES-clutch) material that allows the user to maintain a given posture without causing fatigue.

In simple terms, the suit will support the user’s muscles in a given position. If the user wishes to flex their arm, they think it, and the suit will stimulate those muscles to achieve that position. Ready to unflex that arm? Think it, and the suit will stimulate those muscles and the arm will release back into its natural state.
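For readers who think in code, the think-move-hold cycle can be sketched as a tiny state machine. This is a hypothetical illustration, not the project's control software. The names `Intent`, `SuitCommand`, and `next_command` are invented here, and so is the rule that the clutch engages only once a flex intention has been held.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Intent(Enum):
    """Decoded movement intention (hypothetical two-state simplification)."""
    FLEX = "flex"
    RELEASE = "release"


@dataclass(frozen=True)
class SuitCommand:
    stimulate: Optional[str]  # which movement to stimulate, or None
    clutch_engaged: bool      # True when the clutch holds the posture


def next_command(intent: Intent, previous: Intent) -> SuitCommand:
    """Map the current and previous decoded intention to a suit command."""
    if intent is not previous:
        # A new intention: free the joint and stimulate the muscles.
        return SuitCommand(stimulate=intent.value, clutch_engaged=False)
    # Intention unchanged: stop stimulating. Assumption: the clutch
    # holds a flexed posture, while a released arm simply rests.
    return SuitCommand(stimulate=None, clutch_engaged=intent is Intent.FLEX)


# The user thinks "flex", keeps thinking it, then thinks "release".
print(next_command(Intent.FLEX, Intent.RELEASE))
print(next_command(Intent.FLEX, Intent.FLEX))
print(next_command(Intent.RELEASE, Intent.FLEX))
```

The point of the sketch is the division of labor the article describes: stimulation does the moving, and the clutch does the holding, so the muscles are not stimulated continuously just to keep a pose.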

This combination of AI algorithms and clutch material aims to support arm and hand movements in real time, translating movement intentions into action and accelerating neuro-rehabilitation.

MJ Banias is a journalist who covers security and technology. He is the host of The Debrief Weekly Report. You can email MJ at mj@thedebrief.org or follow him on Twitter @mjbanias.