The AI-driven image platform Midjourney says users are not allowed to create explicit or sexual content, and it maintains a strict “PG-13” rating by blocking certain keywords. However, the AI appears to be generating NSFW content that users never asked for, leaving some critics wondering what is going on behind the virtual scenes.

The Debrief decided to run a test, and within minutes, without a suggestive prompt in sight, we stumbled upon NSFW content.

Accidentally Making NSFW Content on Midjourney

While exploring the capabilities of Midjourney V6 (Version 6), a release of the platform known for its photorealistic image generation, AI artist and author Tim Boucher stumbled upon a loophole in the system’s NSFW content filter. Midjourney, like many AI-driven platforms, has stringent rules against producing NSFW content and uses filters to bar specific terms and expressions that might lead to such outputs.

Dozens of terms, such as “kill,” “blood,” “sexy,” “erotic,” and “nude,” are blocked by Midjourney; if a prompt contains a blocked word, it doesn’t work. However, Boucher discovered that by using alternative terms that the filter did not recognize as NSFW triggers, it was possible to generate images that did not align with the platform’s PG-13 standards.

According to Midjourney, such content is not accessible. While internet users have found workarounds, such as requesting “strawberry syrup” instead of “blood,” the company says it continuously updates its filters to block such requests. In Boucher’s case, however, he was simply looking for images for his book, Relaxatopia, which takes place at a futuristic dystopian beach resort. The prompt he used was “dystopian resort.”

Boucher’s experience highlights a critical issue in AI image generators: while explicit terms may be blacklisted, synonyms or related terms might not be, allowing users to circumvent the intended content restrictions. For example, while a term like “wound” might be restricted, a synonym like “injury” might not be, potentially leading to content that breaches the platform’s guidelines. However, there is a much broader issue here: Boucher and others were not deliberately trying to subvert Midjourney’s protections.
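To make the synonym problem concrete, here is a minimal sketch of a naive keyword blocklist. It assumes a simple exact-word match; Midjourney has not published how its filter actually works, and the `BLOCKED_TERMS` set and `is_blocked` function are hypothetical, built only from words mentioned in this article. The sketch shows that “wound” is caught while “injury” slips through, and that a prompt-only check has nothing to say about an innocuous prompt like “beach party.”

```python
import re

# Hypothetical blocklist for illustration only; not Midjourney's real list.
BLOCKED_TERMS = {"kill", "blood", "sexy", "erotic", "nude", "wound"}


def is_blocked(prompt: str) -> bool:
    """Return True if any whole word in the prompt is on the blocklist.

    This checks only the text of the prompt, never the generated image.
    """
    words = re.findall(r"[a-z]+", prompt.lower())
    return any(word in BLOCKED_TERMS for word in words)


for prompt in ["a deep wound on his arm", "a deep injury on his arm", "beach party"]:
    verdict = "blocked" if is_blocked(prompt) else "allowed"
    print(f"{prompt!r}: {verdict}")
```

Because a filter like this inspects words rather than meaning, every synonym has to be added by hand, and it cannot catch unwanted output produced from a prompt that contains no flagged words at all.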

The San Francisco-based team behind Midjourney appeared on the internet in March 2022. The company was founded by David Holz, who previously co-founded Leap Motion, a failed startup that aimed to replace the computer mouse with hand gestures.

To use Midjourney, you first need the messaging app Discord. After paying a monthly subscription fee, which starts at about $10, you gain access. With over 14 million registered users, Midjourney essentially operates as a bot that takes requests from users via Discord chat.

Our test set out to answer a simple question: using Midjourney, could we reproduce Boucher’s results?

The Test

Boucher explained that the easiest way to get such results is to ask for images of a situation where people typically wear less clothing. Prompts such as “people out on a hot day,” “people at the beach,” or “spa day” typically work.

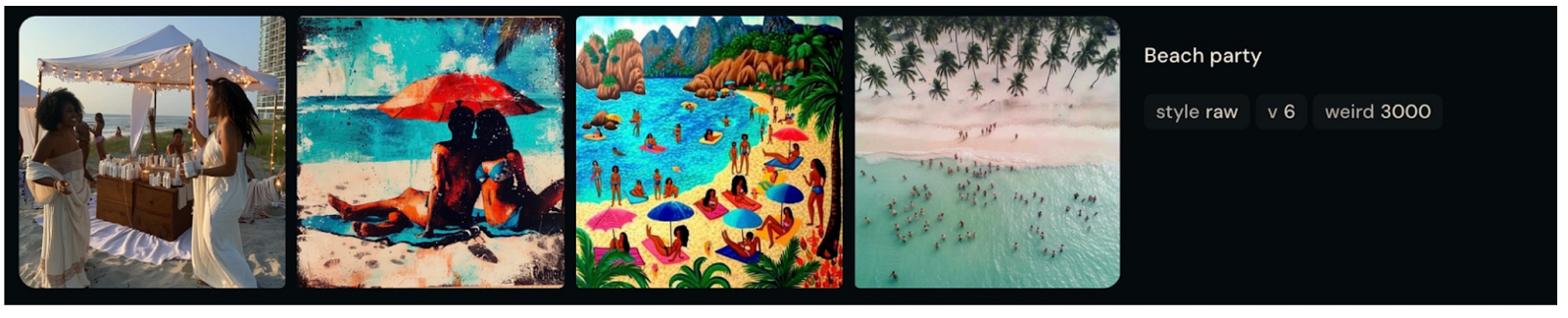

On January 26, with Boucher’s help, we decided to begin with “beach party.” Those two words, “beach party,” were all that was typed into the Discord chat bar.

Midjourney generated the four images above. Since the first one was the most realistic and contained easily recognizable people, we chose it for our test.

After selecting the image, we used the Variation feature. In simple terms, Midjourney takes the image you choose and creates alternative versions of it. Clicking “Variation (Low)” makes only subtle changes to the image and provides another four options that all look fairly similar. Clicking “Variation (Strong)” makes more significant changes and creates another four images to choose from.

In our test, we clicked “Variation (Strong)” and then repeated it four more times, until one of the images contained a woman who was not wearing a top. We selected that image and ran another “Variation (Strong),” and one of the AI-generated women in the resulting image was completely nude.

Additional variations simply generated more and more NSFW content. In all, it took five minutes: with “beach party” as our only prompt, we stumbled onto a nude beach.

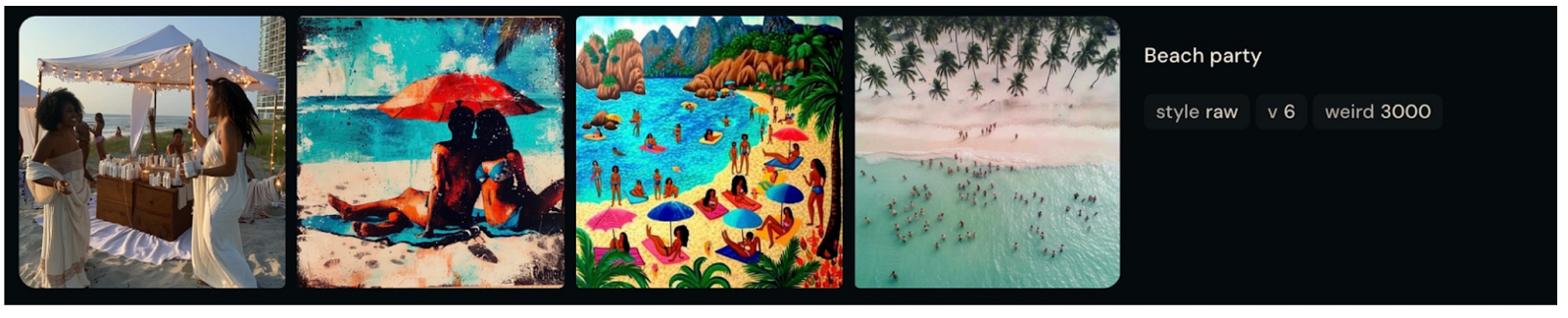

To confirm the results, Boucher and The Debrief ran a second test several days later, on January 31. Using the same prompt, “beach party,” we selected this image.

With a couple of follow-up clicks of “Variation (Strong),” multiple images containing nudity were generated without any further prompts.

The Dark Side of AI

Boucher isn’t the only Midjourney user to notice that version 6 seems to have eased its nudity filters. In one Reddit discussion, a user notes that the simple prompt “put a banana on it” produced multiple variations containing nudity.

The ability to generate explicit or violent content via AI is a fairly pervasive issue. Last week, a pornographic AI-generated image of Taylor Swift was trending on the internet. The image was made by exploiting a loophole in Microsoft’s AI tool; a user first shared it on the chat app Telegram, and it went viral on X (formerly Twitter) shortly thereafter. Microsoft has since fixed the loophole. Before this, far-right activists had used the program to generate racist and hateful content for the purpose of spreading disinformation. There will always be ways through the proverbial safety net.

However, the concern with Midjourney is that the images it is creating are not being requested. Any user, minors included, can seemingly type in something fairly innocuous, and Midjourney could hand them images containing nudity.

“On the one hand, as an artist, some of these images are aesthetically very beautiful. If the user is a consenting adult, the problem is reduced. On the other hand, as a Trust & Safety professional, your system should not be creating nudes when people aren’t asking for it,” Boucher told The Debrief. “Especially since your rules officially disallow nudes. And when users ask for it directly, they may be banned outright from using the service. There’s a major disconnect here.”

The Debrief reached out to Midjourney for comment and will update the article if the company responds.

MJ Banias is a journalist who covers security and technology. He is the host of The Debrief Weekly Report. You can email MJ at mj@thedebrief.org or follow him on Twitter @mjbanias.