In a demonstration of cutting-edge neuroscience and engineering, researchers have achieved something once thought to be science fiction—a brain-computer interface (BCI) that allowed a paralyzed individual to successfully pilot a virtual quadcopter using only his mind.

The feat marks a transformative moment in the field of assistive technologies, showcasing how innovation can bridge the gap between physical limitations and unprecedented possibilities.

The study, recently published in Nature Medicine, reveals the advanced capabilities of a novel intracortical BCI that decodes brain signals into precise finger movements, all achieved through thought alone.

Previous studies have demonstrated the potential for brain-computer interfaces to allow someone to engage in simple tasks, like eating or even playing a video game.

However, for the first time, researchers demonstrated the real-time application of brain-computer interface technology to allow a participant with complete paralysis to seamlessly control a virtual drone through a series of complex maneuvers.

This not only addresses significant unmet needs for individuals with disabilities but also opens doors to a host of practical applications for BCIs.

“Being able to move multiple virtual fingers with brain control, you can have multifactor control schemes for all kinds of things,” Stanford professor of neurosurgery and co-author of the study Dr. Jamie Henderson said in a press release. “That could mean anything, from operating CAD software to composing music.”

The study participant, a 69-year-old man known as “T5,” had suffered a spinal cord injury that left him unable to use his limbs. Using microelectrode arrays implanted in the motor cortex—the brain region responsible for voluntary movement—researchers developed a system capable of decoding specific finger movements.

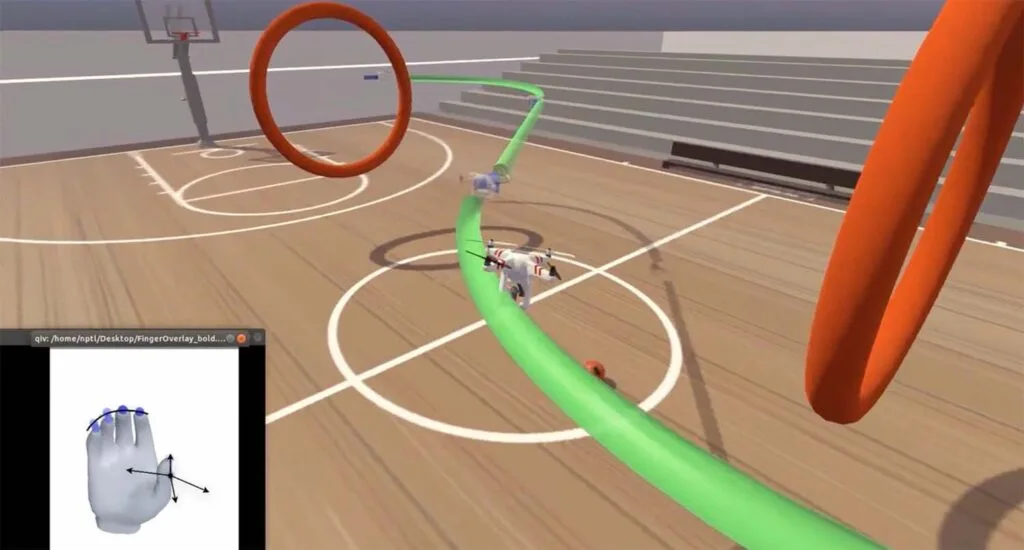

By translating these neural signals into digital commands, the system let T5 control a virtual drone with astonishing dexterity, navigating obstacle courses and performing precision tasks in a virtual environment.

According to the study’s lead author, Dr. Matthew Willsey of the University of Michigan, the BCI system represents a significant leap forward in assistive technology.

“It takes the signals created in the motor cortex that occur simply when the participant tries to move their fingers and uses an artificial neural network to interpret what the intentions are to control virtual fingers in the simulation,” Dr. Willsey said. “Then we send a signal to control a virtual quadcopter.”

“This is a greater degree of functionality than anything previously based on finger movements.”

The brain-computer interface operates through a series of silicon microelectrode arrays, each with 96 channels that record neural activity in the motor cortex.

The system then uses advanced algorithms to decode these signals, interpreting them as movements of three distinct finger groups, with the thumb moving in two dimensions. The thumb therefore contributes two degrees of freedom and each of the other two finger groups contributes one, for a total of four, enabling fine control of the virtual drone.

For T5, the system’s intuitive nature was key. As he visualized moving his fingers, the BCI seamlessly interpreted his brain activity to control the drone’s velocity, direction, and orientation. Unlike earlier systems that focused on simple cursor control or robotic arm manipulation, this BCI leveraged the natural dexterity of imagined finger movements to achieve unprecedented precision and responsiveness.
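To make the idea of four degrees of freedom concrete, here is a minimal sketch of how decoded finger positions could be turned into quadcopter commands. It is an illustration only, not the researchers' software: the names `FingerState`, `QuadcopterCommand`, and `fingers_to_command`, the 0-to-1 position scale, the deadband value, and the particular pairing of fingers with flight axes are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class FingerState:
    """Hypothetical decoder output: positions in [0, 1], with 0.5 as rest."""
    thumb_x: float        # thumb, first dimension
    thumb_y: float        # thumb, second dimension
    index_middle: float   # second finger group (assumed grouping)
    ring_little: float    # third finger group (assumed grouping)


@dataclass
class QuadcopterCommand:
    """Hypothetical control axes, each in [-1, 1]."""
    forward: float
    strafe: float
    climb: float
    yaw: float


def _centered(value: float, deadband: float = 0.05) -> float:
    """Clamp to [0, 1], rescale to [-1, 1], and ignore tiny offsets near rest."""
    value = min(max(value, 0.0), 1.0)
    offset = 2.0 * (value - 0.5)
    return 0.0 if abs(offset) < deadband else offset


def fingers_to_command(fingers: FingerState) -> QuadcopterCommand:
    """Map the four decoded degrees of freedom onto four flight axes.

    The axis assignment below is an assumption chosen for illustration.
    """
    return QuadcopterCommand(
        forward=_centered(fingers.thumb_y),
        strafe=_centered(fingers.thumb_x),
        climb=_centered(fingers.index_middle),
        yaw=_centered(fingers.ring_little),
    )


if __name__ == "__main__":
    # All fingers at rest: the drone receives no movement command.
    print(fingers_to_command(FingerState(0.5, 0.5, 0.5, 0.5)))
```

The deadband is one simple way to keep small decoding jitter around the rest position from nudging the drone. A real system would likely need smoothing and calibration that this sketch leaves out.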

The implications of this research extend far beyond its technological achievements. According to surveys, individuals with paralysis often report unmet needs for social engagement, recreational activities, and a sense of personal empowerment.

The significance of video games and virtual environments for people with disabilities has been widely documented. Multiplayer games, in particular, offer a sense of social connectedness and enablement.

In the future, by integrating BCI technology into such platforms, individuals with motor impairments could participate fully in gaming, remote work, and other digital activities—not just as passive users but as active contributors.

“People tend to focus on restoration of the sorts of functions that are basic necessities—eating, dressing, mobility—and those are all important,” Dr. Henderson said. “But oftentimes, other equally important aspects of life get short shrift, like recreation or connection with peers. People want to play games and interact with their friends.”

The road to this achievement was not without hurdles. Early trials faced limitations in decoding accuracy and responsiveness, particularly when controlling multiple degrees of freedom.

However, through iterative improvements and machine learning enhancements, the system now achieves a target acquisition rate of 76 targets per minute with nearly 100% success in completing tasks. These metrics exceed those of previous brain-computer interface systems, which often struggled with reliability and speed.
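For a sense of scale, the reported rate can be converted into an average time per target. The short calculation below is a back-of-the-envelope sketch; the function name `seconds_per_target` is introduced here for illustration, and the result is a simple average that says nothing about how individual trials varied.

```python
def seconds_per_target(targets_per_minute: float) -> float:
    """Average time spent on each target, given a rate in targets per minute."""
    return 60.0 / targets_per_minute


if __name__ == "__main__":
    # 76 targets per minute is the rate reported in the article.
    print(f"{seconds_per_target(76):.2f} seconds per target")  # prints "0.79 seconds per target"
```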

Moreover, T5’s feedback during the trials played a pivotal role in refining the system. For example, visual cues, such as a hand graphic displaying finger positions, improved his ability to control the drone intuitively.

“It’s like riding your bicycle on your way to work,” T5 remarked, highlighting the system’s ease of use after initial training. “What am I going to do at work today and you’re still shifting gears on your bike and moving right along.”

Despite its success, researchers acknowledge that this technology is still in its early stages. The current system relies on invasive implants and laboratory-based setups, making it inaccessible for widespread use. However, advancements in non-invasive BCIs and miniaturized hardware could pave the way for more practical applications in the near future.

The same principles could reach well beyond recreation. They could be applied to control prosthetic limbs, operate smart home devices, or interact with digital interfaces in everyday life. For individuals with severe disabilities, such applications could drastically improve their quality of life and foster greater independence.

T5’s experience underscored the human impact of this technology. He expressed a renewed sense of agency as he navigated the virtual drone through hoops and obstacles.

“T5 expressed themes of social connectedness, enablement, and recreation during BCI control of the quadcopter,” researchers wrote. “He felt controlling a quadcopter would enable him, for the first time since his injury, to figuratively ‘rise up’ from his bed/chair.”

“The quadcopter simulation was not an arbitrary choice, the research participant had a passion for flying,” co-author and computer scientist at Stanford University, Donald Avansino, added. “While also fulfilling the participant’s desire for flight, the platform also showcased the control of multiple fingers.”

Ultimately, as the field of brain-computer interfaces continues to evolve, the possibilities are boundless. In the future, BCIs could enable individuals to play musical instruments, design 3D models, or even pilot real-world drones.

This fusion of neuroscience, artificial intelligence, and engineering could redefine what’s possible for people with disabilities and the technology sector as a whole.

“Controlling fingers is a stepping stone,” co-author and professor of electrical and computer engineering at Rice University, Dr. Nishal Shah, explained. “The ultimate goal is whole body movement restoration.”

Tim McMillan is a retired law enforcement executive, investigative reporter and co-founder of The Debrief. His writing typically focuses on defense, national security, the Intelligence Community and topics related to psychology. You can follow Tim on Twitter: @LtTimMcMillan. Tim can be reached by email: tim@thedebrief.org or through encrypted email: LtTimMcMillan@protonmail.com