A team of neuroscientists and neurosurgeons from Duke University says it has developed a promising technique for telepathic-like communication by directly reading people’s thoughts.

Previous efforts have had some limited success in using brain scans to try to decode human thought. However, this technique, which uses a “speech prosthetic” brain implant that decodes those thoughts and converts them to actual speech, has proven exceptionally reliable and accurate in laboratory trials.

More research and testing are needed, but the Duke University team believes its device could dramatically improve the lives of people whose brains work normally but whose speech is limited by medical or surgical conditions.

Brain Implant Key to Communication From Thoughts Alone

“There are many patients who suffer from debilitating motor disorders, like ALS (amyotrophic lateral sclerosis) or locked-in syndrome, that can impair their ability to speak,” said Gregory Cogan, Ph.D., a professor of neurology at Duke University’s School of Medicine and one of the project’s lead researchers. “But the current tools available to allow them to communicate are generally very slow and cumbersome.”

Hoping to bridge that gap with the latest improvements in modern technology, the team looked at the viability of reading a wider range of thoughts directly from the regions of the brain associated with speech and then converting those signals back into the intended speech. Of course, some technologies like this already exist in laboratory settings, but even the best ones convert thoughts to words at a rate of around 78 words per minute. Since the average human speaks at around 150 words a minute, the researchers liken the current method to listening to a podcast at half speed all of the time.
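The "half speed" comparison follows from the two rates quoted above. As a minimal sketch of that arithmetic (the function names here are illustrative and do not come from the Duke team), assuming the approximate figures of 78 and 150 words per minute:

```python
# Figures quoted in the article; both are approximate.
DECODER_WPM = 78    # best current laboratory systems
NATURAL_WPM = 150   # average human speaking rate


def relative_speed(decoder_wpm: float, natural_wpm: float) -> float:
    """Return the decoder's rate as a fraction of the natural speaking rate."""
    return decoder_wpm / natural_wpm


def seconds_to_say(words: int, wpm: float) -> float:
    """Return the seconds needed to produce `words` words at `wpm` words per minute."""
    return words * 60.0 / wpm


ratio = relative_speed(DECODER_WPM, NATURAL_WPM)
print(f"Decoder runs at {ratio:.0%} of natural speed")
print(f"150 words take {seconds_to_say(150, NATURAL_WPM):.0f} s spoken aloud "
      f"and about {seconds_to_say(150, DECODER_WPM):.0f} s through the decoder")
```

At these rates, a passage that takes one minute to speak aloud would take nearly two minutes to produce through the best existing systems.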

The main limitation, according to researchers, has been the number of thought-decoding sensors one can place on the “paper-thin piece of material” lying on the surface of the associated brain regions. As with the majority of scientific endeavors, more data equals better results. In this case, more sensors can mean faster performance, resulting in something more akin to real-time communication from thoughts alone.

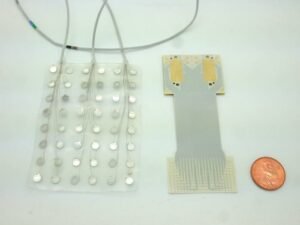

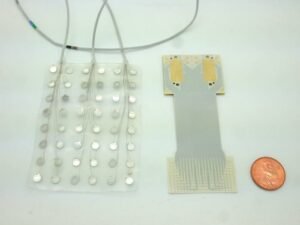

This realization led Cogan to team with fellow Duke Institute for Brain Sciences faculty member Jonathan Viventi, Ph.D., whose lab specializes in making high-density, ultra-thin, flexible brain sensors. Viventi and his lab responded with a new sensor package that packs 256 individual brain sensors on a piece of plastic the size of a postage stamp.

“Neurons just a grain of sand apart can have wildly different activity patterns when coordinating speech,” the team explains of their densely packed sensor chip, “so it’s necessary to distinguish signals from neighboring brain cells to help make accurate predictions about intended speech.”

New Approach Shows Up To 84% Success at Translating Thoughts Into Actual Words

To test their new sensor chip, the team recruited four volunteers who were already scheduled to undergo brain surgery for other conditions like tumor removal or Parkinson’s disease treatments.

Next, each patient had the sensor chip placed on the surface of their brain, and then the volunteers were asked to speak out loud a series of short, nonsense words like “kug,” “ava,” or “kip.” With its dense array of sensors, the chip collected data from each volunteer’s speech motor cortex as it “coordinated” the nearly 100 muscles needed to move the lips, tongue, jaw, and larynx when forming actual speech.

After the data capture, Suseendrakumar Duraivel, the first author of the new report published in the journal Nature Communications and a biomedical engineering graduate student at Duke, fed the data into a computer loaded with a customized machine learning algorithm explicitly developed to decode these types of signals. The results were considered a measurable success.

“For some sounds and participants, like /g/ in the word ‘gak,’ the decoder got it right 84% of the time when it was the first sound in a string of three that made up a given nonsense word,” the researchers explain of their initial success. However, they note that accuracy dropped “as the decoder parsed out sounds in the middle or at the end of a nonsense word.” The team notes that it also struggled if two sounds were similar, “like /p/ and /b/.”
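To make the idea of accuracy by position concrete, here is a minimal sketch of how such a score could be tallied for three-sound nonsense words. It is not the team's decoder or scoring code; the function name and the toy data are invented for illustration and are not results from the study.

```python
from typing import List, Sequence


def accuracy_by_position(actual: Sequence[Sequence[str]],
                         decoded: Sequence[Sequence[str]]) -> List[float]:
    """Return the fraction of correctly decoded sounds at each position in the word."""
    if not actual or len(actual) != len(decoded):
        raise ValueError("need two non-empty lists of equal length")
    n_positions = len(actual[0])
    hits = [0] * n_positions
    for spoken, guessed in zip(actual, decoded):
        for i in range(n_positions):
            if spoken[i] == guessed[i]:
                hits[i] += 1
    return [h / len(actual) for h in hits]


# Invented toy data: each word is split into its three sounds.
actual = [("g", "a", "k"), ("k", "u", "g"), ("k", "i", "p"), ("a", "v", "a")]
decoded = [("g", "a", "p"), ("k", "u", "k"), ("k", "a", "b"), ("a", "v", "a")]

for position, score in enumerate(accuracy_by_position(actual, decoded), start=1):
    print(f"Sound {position}: {score:.0%} correct")
```

The toy data is arranged to mimic the pattern the researchers describe, with first sounds decoded most reliably and similar-sounding final consonants such as /p/ and /b/ confused.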

Overall, the new approach got it right about 40% of the time. The researchers point out that although this number may sound low, “it was quite impressive given that similar brain-to-speech technical feats require hours or days-worth of data to draw from.” In contrast, their approach had only about 90 seconds of data from each patient’s 15-minute test.

Huge Financial Grant Could Accelerate System to Practical Use

Moving forward, the team says that along with improving their conversion accuracy with more data and more testing, they are also hoping to make a “cordless” version of the system that removes the external wires connecting to the patient. In fact, they have already received a $2.4 million grant from the National Institutes of Health to pursue those improvements.

“We’re now developing the same kind of recording devices, but without any wires,” Cogan said. “You’d be able to move around, and you wouldn’t have to be tied to an electrical outlet, which is really exciting.”

Still, the ultimate goal is a commercially available system that can enable communication with thoughts alone, and the researchers consider that goal very attainable.

“We’re at the point where it’s still much slower than natural speech,” Viventi said in a recent Duke Magazine story about the technology, “but you can see the trajectory where you might be able to get there.”

Christopher Plain is a Science Fiction and Fantasy novelist and Head Science Writer at The Debrief. Follow and connect with him on X, learn about his books at plainfiction.com, or email him directly at christopher@thedebrief.org.