A Stanford University researcher claims facial recognition software can reasonably predict a person’s political affiliation based solely on facial features.

In the study, published in the Nature journal Scientific Reports, Dr. Michal Kosinski used over 1 million Facebook and dating site profiles from users based in Canada, the U.S., and the U.K. Kosinski says his algorithm could correctly identify a person’s political orientation with 72% accuracy.

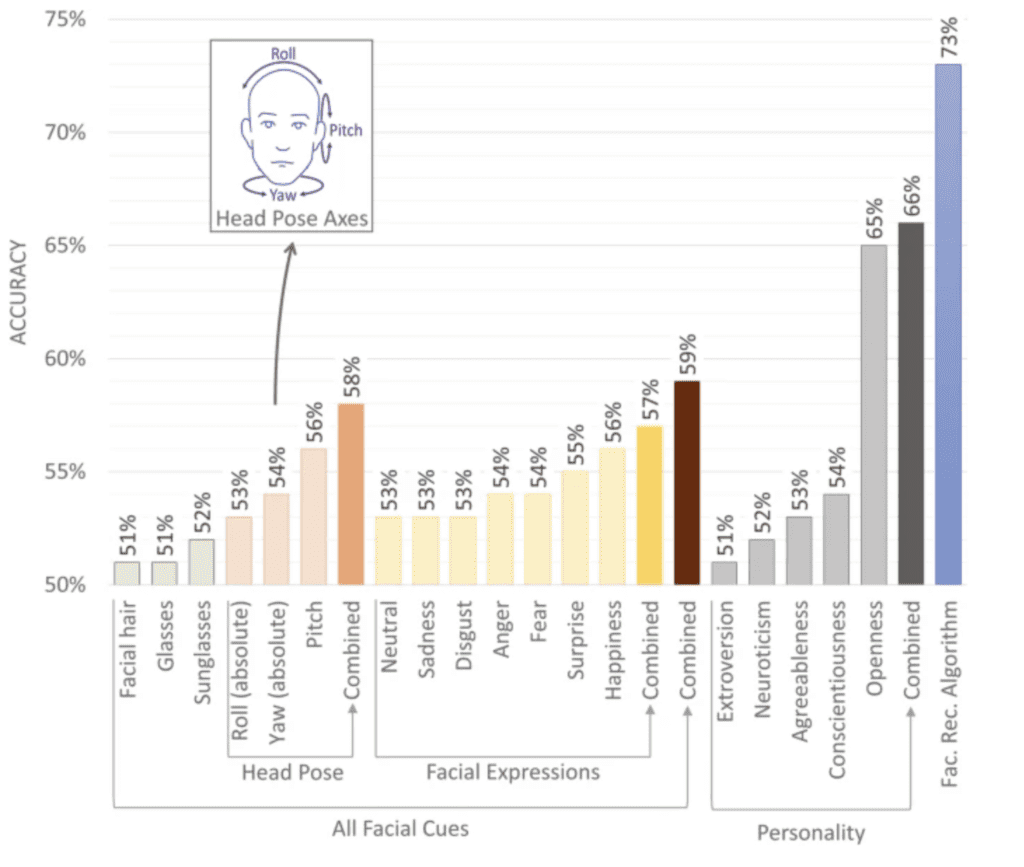

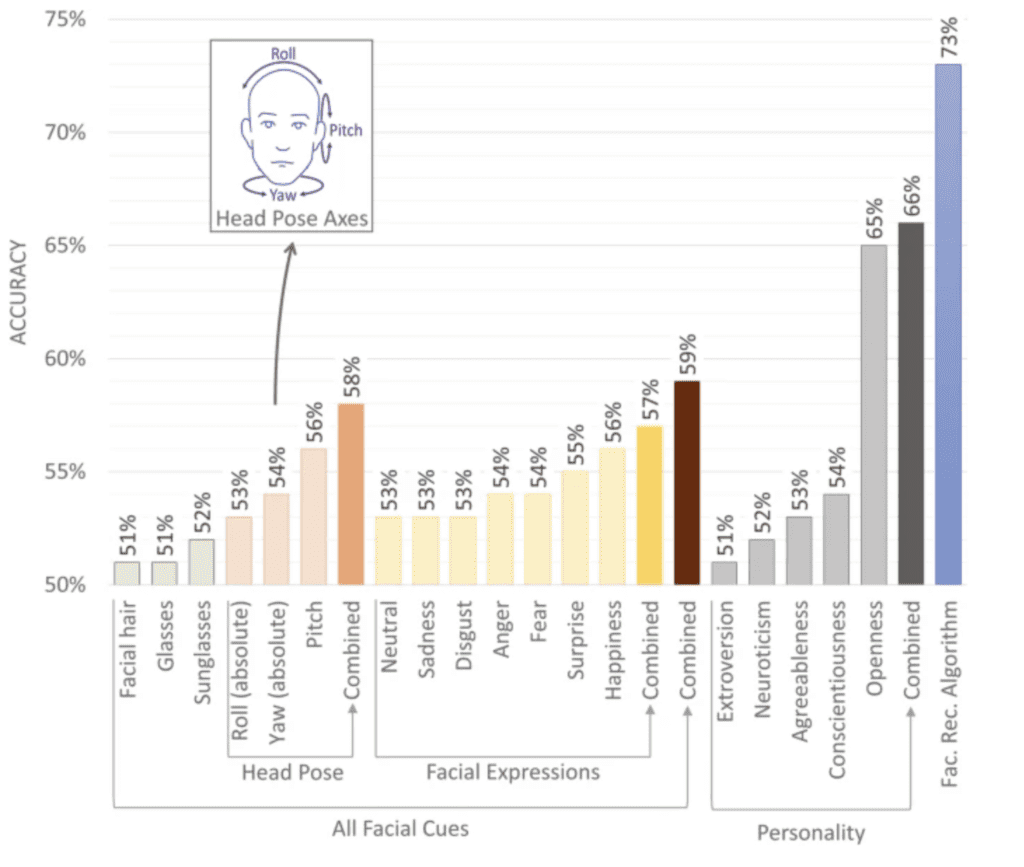

“Political orientation was correctly classified in 72% of liberal–conservative face pairs, remarkably better than chance (50%), human accuracy (55%), or one afforded by a 100-item personality questionnaire (66%),” Kosinski notes in the study.
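One way to read the “face pairs” figure is as a pairwise accuracy: for every possible pairing of one liberal and one conservative, check whether the classifier gave the conservative the higher score. The sketch below illustrates that reading only. The function name and the scores are hypothetical, invented for this example, and are not taken from the study.

```python
from itertools import product


def pairwise_accuracy(liberal_scores, conservative_scores):
    """Fraction of liberal-conservative pairs in which the conservative
    receives the higher score. Ties count as half a correct pair."""
    pairs = list(product(liberal_scores, conservative_scores))
    if not pairs:
        raise ValueError("need at least one score in each group")
    correct = 0.0
    for liberal, conservative in pairs:
        if conservative > liberal:
            correct += 1.0
        elif conservative == liberal:
            correct += 0.5
    return correct / len(pairs)


# Hypothetical classifier scores (higher = "more conservative").
liberal_scores = [0.2, 0.4, 0.6]
conservative_scores = [0.3, 0.7]
print(pairwise_accuracy(liberal_scores, conservative_scores))
```

In this toy case, four of the six possible pairs are ordered correctly. Random guessing would land at about 50% on this measure, which is why the study uses 50% as its chance baseline.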

Detecting a person’s political leanings isn’t the first controversial claim Kosinski has made about facial recognition. In 2017, the Stanford Graduate School of Business professor published a highly contentious study claiming facial recognition software could determine a person’s sexual orientation.

Critics of Kosinski’s work say his research is based on the pseudoscientific concept of physiognomy. Going back to Ancient Greece, the practice of physiognomy claims it is possible to determine someone’s personality or character based solely on their physical appearance. Detractors point to the dangers of physiognomy and its uses in the 18th and 19th centuries for “scientific racism” to justify racial discrimination.

In response to criticisms, Kosinski notes, “Physiognomy was based on unscientific studies, superstition, anecdotal evidence, and racist pseudo-theories. The fact that its claims were unsupported, however, does not automatically mean that they are all wrong.”

Using Facial Recognition for Politics

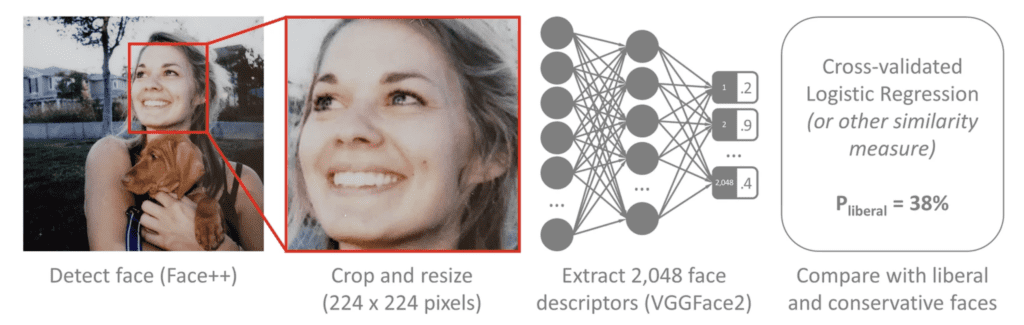

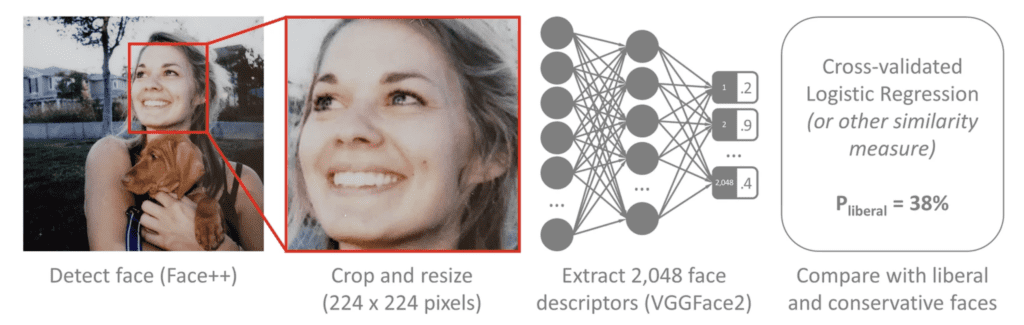

The study used a sample of 1,085,795 participants’ images, along with their self-reported political orientation, age, and gender. Rather than developing an algorithm aimed explicitly at political orientation, Kosinski says he used existing open-source facial recognition software. Facial descriptors were “compared with the average face descriptors of liberals versus conservatives” to predict each participant’s political leanings.
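The comparison against “average face descriptors” can be pictured with a small sketch. This is an illustration under stated assumptions, not the study’s code: it uses toy 3-value descriptors in place of real ones, it assumes cosine similarity as the comparison measure, and all function names and numbers are hypothetical.

```python
import math


def mean_vector(vectors):
    """Element-wise average of a list of equal-length descriptors."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]


def cosine_similarity(a, b):
    """Cosine of the angle between two descriptors (assumed measure)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("descriptors must be non-zero")
    return dot / (norm_a * norm_b)


def predict_orientation(descriptor, liberal_mean, conservative_mean):
    """Label a face by whichever group's average descriptor it is closer to."""
    liberal_similarity = cosine_similarity(descriptor, liberal_mean)
    conservative_similarity = cosine_similarity(descriptor, conservative_mean)
    if conservative_similarity > liberal_similarity:
        return "conservative"
    return "liberal"


# Toy descriptors invented for illustration only.
liberal_faces = [[1.0, 0.0, 0.2], [0.8, 0.1, 0.0]]
conservative_faces = [[0.0, 1.0, 0.1], [0.2, 0.9, 0.3]]

liberal_mean = mean_vector(liberal_faces)
conservative_mean = mean_vector(conservative_faces)

print(predict_orientation([0.9, 0.2, 0.1], liberal_mean, conservative_mean))
```

A sketch like this also shows why the critics’ objection matters: the comparison is agnostic about what the descriptor values encode, so any signal that differs between the two groups’ photos would shift the averages, whether or not it comes from the face itself.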

According to Kosinski, the results showed that facial recognition software could predict a person’s political orientation with an average accuracy of 71.5% across the entire sample, and 68% of the time when control samples from the same demographic groups (age, gender, and ethnicity) were paired together.

“High predictability of political orientation from facial images implies that there are significant differences between the facial images of conservatives and liberals,” Kosinski concludes.

Pseudoscience or Legitimate Privacy Concern?

Princeton neuroscientist Alexander Todorov argues that the methods Kosinski used in his facial recognition research are inherently flawed. A previous critic of Kosinski’s work, Todorov points out that the patterns picked up by a facial recognition algorithm comparing millions of photos may have very little to do with facial characteristics. Instead, the software could be focusing on a host of non-facial cues often evident in the self-posted images used on social media or dating sites.

According to Kosinski, “to minimize the role of the background and non-facial features, images were tightly cropped around the face and resized to 224 x 224 pixels.” Additionally, a program called VGGFace2 was used to convert the facial images into 2,048-value-long vectors, or face descriptors.

Nevertheless, Todorov has called Kosinski’s research “incredibly ethically questionable.” Other science writers have called Kosinski’s research “outlandish,” “BS,” and “Hogwash.”

For his part, Kosinski says his work isn’t intended to advocate the development of facial recognition programs to predict someone’s political affiliation or sexual orientation. Instead, Kosinski asserts his goal is to warn of privacy concerns related to the digital footprints people leave on the web. “Even modestly accurate predictions can have a tremendous impact when applied to large populations in high-stakes contexts, such as elections. For example, even a crude estimate of an audience’s psychological traits can drastically boost the efficiency of mass persuasion.”

While current claims that facial recognition can determine political identity are controversial, there may be reason not to dismiss Kosinski’s concerns outright.

In 2013, a paper co-authored by Kosinski found that one could use people’s “likes” on Facebook to predict their characteristics and personality traits accurately. Kosinski and his co-authors warned of the “implications for online personalization and privacy” of their results.

Reportedly, Kosinski’s 2013 findings inspired the creation of the highly controversial conservative data firm Cambridge Analytica. In 2018, Cambridge Analytica closed operations after it was revealed the firm had been using Facebook data, in the manner Kosinski had warned of, to influence hundreds of elections globally, including the 2016 U.S. Presidential Election and the U.K.’s withdrawal from the European Union.

Regarding Kosinski’s recent facial recognition study, Tristan Greene of The Next Web writes, “This latest piece of silly AI development from the Stanford team isn’t dangerous because it can determine your political affiliation. It’s dangerous because people might believe it can, and there’s no good use for a system designed to breach someone’s core ideological privacy, whether it works or not.”