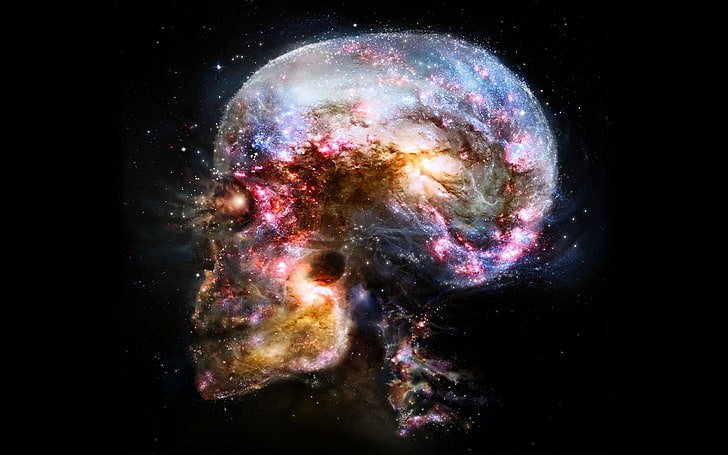

At the roots of reality exists a complex system of features and processes which we call nature. Loosely defined, nature comprises the phenomena that make up the physical world as we know it… and nobody ever said it was anything less than complex.

However, new research may take the intricacies of the natural world to a new level of complication, even going so far as to suggest that nature itself may effectively function as a neural network.

Simply put, a neural network is a set of algorithms designed to mimic the way a brain, usually assumed to be a human one, works. In a new paper, Vitaly Vanchurin of the Department of Physics at the University of Minnesota Duluth examines the bold proposition that our entire universe essentially functions as a neural network.

Vanchurin’s study builds much of its premise on quantum mechanics, a paradigm he says is “so successful that it is widely believed that on the most fundamental level the entire universe is governed by the rules of quantum mechanics and even gravity should somehow emerge from it.”

In his paper, Vanchurin provides a mathematical rationale for why he thinks the universe not only behaves like a neural network but, consistent with quantum mechanics, literally works as one.

“We are not just saying that the artificial neural networks can be useful for analyzing physical systems or for discovering physical laws,” Vanchurin writes. “[W]e are saying that this is how the world around us actually works.”

Vanchurin’s paper, which has already stirred controversy among physicists and machine-learning experts, primarily argues that when a neural network’s trainable variables are near equilibrium, its learning dynamics are approximately described by the equations of quantum mechanics, while further from equilibrium they are better described by the equations of classical mechanics. Vanchurin acknowledges that this correspondence might be a coincidental feature of nature, but he nonetheless says that it complements our current understanding of how nature and the physical world actually work as we observe them.

Building on this, Vanchurin also addresses the disconnect between quantum and classical mechanics, which shows up most clearly in the lingering problem of quantum gravity. Added to this is the role of observers, known in quantum mechanics as the measurement problem: the question of how, or even whether, wave function collapse occurs, together with our inability to observe such collapse directly.

Arguably the best-known demonstration of this is the double-slit experiment, first performed by Thomas Young in 1801, which revealed the wavelike behavior of light; later versions of the experiment showed that light can, rather counterintuitively, display the behaviors of both waves and particles. In 1927, Clinton Davisson and Lester Germer showed, by scattering electrons off a nickel crystal, that electrons behave in the same bizarre wavelike fashion; atoms and molecules are also understood to be capable of the same.

However, rather than merely looking at uniting the general relativistic and quantum worlds, Vanchurin argues that a third phenomenon may deserve to be incorporated as well: observers. In his paper, Vanchurin considers the possibility that a proposed “microscopic neural network” may serve as the fundamental structure from which all other phenomena in nature (quantum, classical, and macroscopic observers) emerge.

The paper builds on a previous paper, “Towards a theory of machine learning”, in which Vanchurin employed statistical mechanics to examine neural networks. From this work, Vanchurin first became aware of some of the correspondences that appear to exist between neural networks and the dynamics of quantum physics. Building on the observations of Lars Onsager, David Hilbert and Albert Einstein, Vanchurin says that “the learning dynamics of a neural network can indeed exhibit approximate behaviors described by both quantum mechanics and general relativity,” and that there is “a possibility that the two descriptions are holographic duals of each other.”

So how likely is it that the entire universe actually is essentially a neural network?

“This is a very bold claim,” Vanchurin concedes in his paper. “We are not just saying that the artificial neural networks can be useful for analyzing physical systems or for discovering physical laws, we are saying that this is how the world around us actually works.”

What, precisely, does that mean? According to Vanchurin, “it could be considered as a proposal for the theory of everything.” At the heart of his idea is the notion that, since everything in nature functionally is a neural network, disproving the idea would simply require finding a phenomenon that cannot be modeled in such a way.

“[A]s such,” he writes, “it should be easy to prove it wrong. All that is needed is to find a physical phenomenon which cannot be described by neural networks.”

“Unfortunately (or fortunately),” he says, “it is easier said than done. It turns out that the dynamics of neural networks is so complex that one can only understand it in very specific limits. The main objective of this paper was to describe the behavior of the neural networks in the limits when the relevant degrees of freedom (such as bias vector, weight matrix, state vector of neurons) can be modeled as stochastic variables which undergo a learning evolution.”
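To make the degrees of freedom in that quote a little more concrete, here is a minimal Python sketch. It is an illustration only, not code from Vanchurin’s paper: a toy network whose state vector `x`, weight matrix `W` and bias vector `b` are plain lists. The neuron update `x_i ← tanh(Σ_j W[i][j]·x[j] + b[i])`, the squared-state loss, the learning rate and the Gaussian noise term are all assumptions chosen for simplicity. The noise is just one way to make the weights and biases behave as stochastic variables while they undergo a learning evolution.

```python
import math
import random

def step_state(x, W, b):
    """One activation update of the neurons: x_i <- tanh(sum_j W[i][j] * x[j] + b[i])."""
    n = len(x)
    return [math.tanh(sum(W[i][j] * x[j] for j in range(n)) + b[i]) for i in range(n)]

def loss(x, W, b):
    """Hypothetical loss (an assumption): half the squared norm of the updated state."""
    return 0.5 * sum(y * y for y in step_state(x, W, b))

def learning_step(x, W, b, rng, lr=0.1, noise=0.01):
    """One noisy gradient-descent step on W and b, with the state x held fixed.

    The Gaussian noise makes the trainable variables stochastic;
    noise=0.0 gives plain, deterministic gradient descent.
    """
    n = len(x)
    y = step_state(x, W, b)
    # For L = 0.5 * sum(tanh(z_i)^2), dL/dz_i = y_i * (1 - y_i^2).
    delta = [y[i] * (1.0 - y[i] ** 2) for i in range(n)]
    # dL/dW[i][j] = delta_i * x_j and dL/db_i = delta_i.
    new_W = [[W[i][j] - lr * delta[i] * x[j] + rng.gauss(0.0, noise)
              for j in range(n)] for i in range(n)]
    new_b = [b[i] - lr * delta[i] + rng.gauss(0.0, noise) for i in range(n)]
    return new_W, new_b

if __name__ == "__main__":
    rng = random.Random(0)
    n = 3
    x = [rng.uniform(-1.0, 1.0) for _ in range(n)]                      # state vector of neurons
    W = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]  # weight matrix
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]                      # bias vector
    print("initial loss:", loss(x, W, b))
    for _ in range(200):
        W, b = learning_step(x, W, b, rng)
    print("loss after 200 noisy learning steps:", loss(x, W, b))
```

Even this three-neuron toy hints at Vanchurin’s point: each learning step couples every weight and bias to the others through the shared state, and tracking many such variables is tractable only in special limits, such as the statistical one he describes.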

So, by combining a theory of machine learning with the study of quantum mechanics, general relativity, and the unique role of the macroscopic observer, Vanchurin arrives at the possibility that nature itself may function as a neural network, thereby hinting at a possible, albeit provisional, “theory of everything.”

Vanchurin’s paper, “The world as a neural network,” can be read online in its entirety on the arxiv.org preprint server.