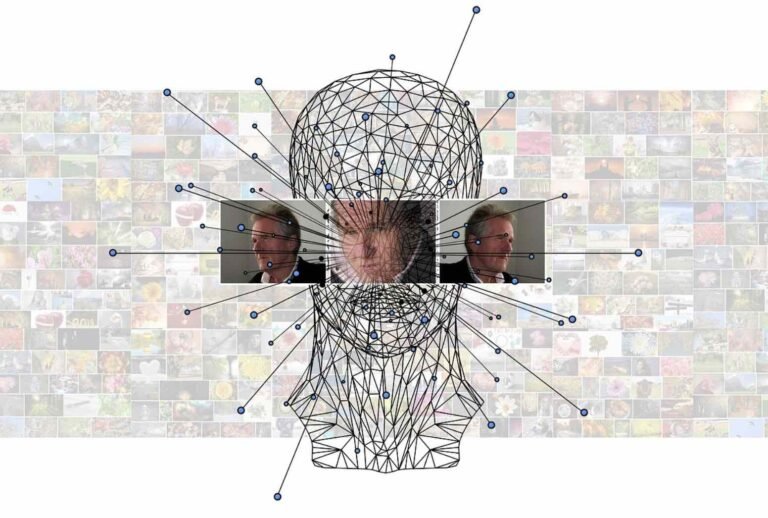

Though facial recognition technology is used by police departments, governments, and even online proctoring services, it has little scientific basis and often leads to discriminatory and harmful outcomes. In 2020, a study in Nature Communications claimed that historical changes in trustworthiness could be tracked with a machine learning algorithm that assessed facial cues in painted portraits.

Only eight days after the study was published, the journal added an editor’s note indicating that some of the criticisms of the paper were under further review. Almost two years later, the editors at Nature Communications are still reviewing and reconsidering the study.

In a response to The Debrief about the status of that review process, a representative for the publication wrote that it is still ongoing.

“Whenever concerns are raised about any paper we have published, we look into them carefully, following an established process, consulting the authors and, where appropriate, seeking advice from peer reviewers and other external experts,” said a spokesperson for Nature Communications in an email.

“Once such processes have concluded and we have the necessary information to make an informed decision, we will follow up with the response that is most appropriate and that provides clarity for our readers as to the outcome,” the spokesperson added.

“In this instance, the process is ongoing,” the spokesperson said, noting they would be willing to provide additional details at a future time “when further information is available.”

Rory Spanton, a Ph.D. researcher at the University of Plymouth, and Olivia Guest, Ph.D., an assistant professor at Radboud University, recently wrote a critique of the study that is now under consideration by the journal. They argue that the premises and methods used to develop the trustworthiness algorithm are flawed and racist.

“Trustworthiness isn’t a very stable construct between individuals or between societies,” Spanton explained. “What one person finds to be a hallmark of trustworthiness could well be completely differently interpreted by another individual.”

Even if the algorithm was trained to reproduce how participants rated the trustworthiness of faces in paintings, that does not mean “the algorithm is scientific,” Spanton said. Spanton and Guest pointed out that using facial features to judge trustworthiness is rooted in a racist, pseudoscientific practice called physiognomy.

Physiognomy gained popularity in the 18th and 19th centuries and was weaponized by the Nazis during the Second World War. Research does not even support the idea that people can accurately judge character from faces.

“There’s always been these sort of marginal scientists doing this kind of pseudoscientific work,” Guest said. “But now it meets the hype of machine learning and artificial intelligence.” In the past few years, researchers have made similarly erroneous claims about facial features, publishing studies that automate physiognomy to “predict” attractiveness, sexual orientation, and even criminality.

Beyond its pseudoscientific premise, Spanton and Guest identified statistical problems in the paper as well as selection bias. “That’s the added level of surrealism in this paper,” Guest said, explaining that the study examined facial features in portraits of mostly white individuals. The portraits themselves are romanticized depictions of their subjects. Participants’ ratings also correlated only weakly with the algorithm’s output, meaning it was not even effective at automating human bias.

The hype surrounding these technologies allows such studies to proliferate and legitimizes their deployment. “While the algorithm that we critique specifically hasn’t been used or sold in a commercial sense,” Spanton added, “I think there is a real precedent and the history of similar algorithms being packaged.” Faulty facial recognition has real consequences: it has led to wrongful incarceration and has been used by repressive regimes to track ethnic minorities.

A technology based on the racist pseudoscience of physiognomy can’t be objective, yet the ethics and history of psychology are seldom taught. “A greater engagement with ethics and then the history of these more problematic pseudoscientific practices would be a really good start in allowing researchers and funders to critique this kind of work,” said Spanton.

Even though many facial recognition applications rest on pseudoscientific premises, the technology continues to be developed and adopted. Buzzwords like “machine learning” and “artificial intelligence” continue to garner million-dollar investments and clickbait headlines.

Simon Spichak is a science communicator and journalist with an MSc in neuroscience. His work has been featured in outlets that include Massive Science, Being Patient, Futurism, and Time magazine. You can follow his work at his website, http://simonspichak.com, or on Twitter @SpichakSimon.