Robots are increasingly able to perform complicated tasks. Some dance, climb stairs or operate in swarms, and others may one day explore caves on the Moon. But as these abilities expand, so do the programming demands placed on the robots’ operating systems.

Now, a research team from the Soft Robotic Matter group at the Netherlands Institute for Atomic and Molecular Physics (AMOLF) has invented a self-learning robot that is designed to be as simple as possible, with no preprogrammed information other than a single basic algorithm, while still maintaining the ability to adapt to changing circumstances.

“This is a new way of thinking in the design of self-learning robots,” explained chief researcher Dr. Bas Overvelde in a press release announcing his team’s results. “Unlike most traditional, programmed robots, this kind of simple self-learning robot does not require any complex models to enable it to adapt to a strongly changing environment. In the future, this could have an application in soft robotics, such as robotic hands that learn how different objects can be picked up or robots that automatically adapt their behavior after incurring damage.”

Background: What are Self-Learning Robots?

From self-driving cars and drone delivery to robotic surgery and automotive assembly lines, robots are increasingly part of our everyday lives. However, as these systems take on more complex tasks, they must be preprogrammed with more and more information. For robots such as self-driving cars, which aim to match or even surpass human drivers, this growing complexity has repeatedly delayed their arrival on our roads.

Hoping to take robots back to basics, Overvelde and his team wondered whether a train of robots could work together, without communicating, to race around a track at maximum speed.

“We asked ourselves the questions, what are the minimum requirements to make a system that can adapt to the environment,” Overvelde told The Debrief. “We focused here on making the algorithm and hardware requirements as simple as possible. What is important is that our algorithm doesn’t require any prior information of the environment and of itself, so it doesn’t need a model.”

Analysis: Self-Learning Robots Went Off To The Races?

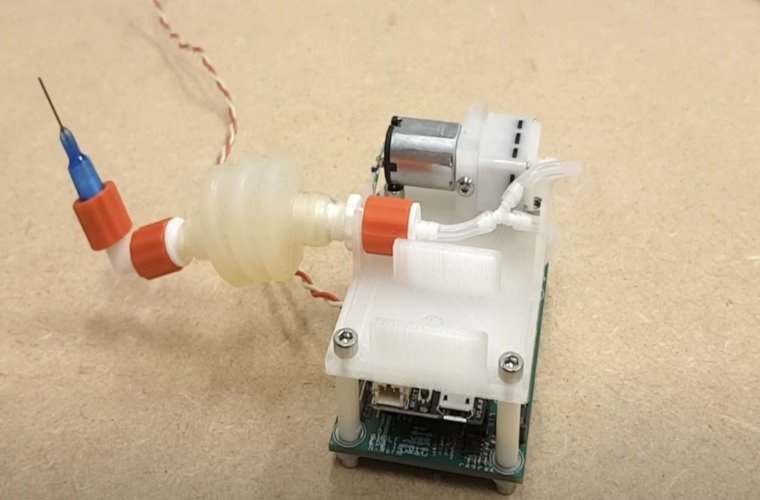

As described in the scientific journal PNAS, the AMOLF team started by building a simple robot from as little material and programming as possible: a 3-D printed PLA (plastic) body, silicone elastomer actuators, a few basic electronic components such as a microcontroller and a mouse sensor, a pump and bellows for motion, and metal screws to hold everything together. Each robot was built individually and programmed to operate alone.

“The robots were only connected physically to each other,” Overvelde told The Debrief. “So there was no communication other than pushing and pulling due to the forces of the soft actuators.”

Each robot moved by pumping air into a bellows and then releasing it through a needle. “We wanted to keep the robots as simple as possible, which is why we chose bellows and air,” said Ph.D. student Luuk van Laake, who was also part of the AMOLF team. “Many soft robots use this method.”

Once built, each robot was loaded with nothing but a simple algorithm that uses basic feedback to find the highest speed it can reach on the track.

“The only thing that the researchers do in advance is to tell each robot a simple set of rules with a few lines of computer code (a short algorithm): switch the pump on and off every few seconds – this is called the cycle – and then try to move in a certain direction as quickly as possible,” the press release explains. “The chip on the robot continuously measures the speed. Every few cycles, the robot makes small adjustments to when the pump is switched on and determines whether these adjustments move the robotic train forward faster. Therefore, each robotic cart continuously conducts small experiments.”
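The rule described in the press release reads like a form of hill climbing: try a small change, keep it only if the result is better. The Python sketch below is a minimal illustration under stated assumptions, not the AMOLF team’s code. It reduces a cart’s pump timing to a single phase angle within each cycle, and the names `TrialAndErrorPump`, `step` and `toy_speed` are hypothetical; `toy_speed` is an invented stand-in for the speed sensor, with its best timing placed arbitrarily at 2.0 radians.

```python
import math
import random
from typing import Callable

TWO_PI = 2 * math.pi


class TrialAndErrorPump:
    """Toy hill-climbing controller for a single cart's pump timing (hypothetical).

    The only tunable parameter is a phase: the moment within each fixed-length
    pump cycle at which the pump switches on, stored as an angle in [0, 2*pi).
    """

    def __init__(self, phase: float = 0.0, step: float = 0.3, seed: int = 0):
        self.phase = phase % TWO_PI
        self.step = step                # largest random tweak per trial (assumed value)
        self.rng = random.Random(seed)  # seeded so runs are repeatable

    def trial(self, measure_speed: Callable[[float], float]) -> None:
        """One small experiment: try a nearby timing and keep it only if faster."""
        baseline = measure_speed(self.phase)  # re-measure the current timing
        candidate = (self.phase + self.rng.uniform(-self.step, self.step)) % TWO_PI
        if measure_speed(candidate) > baseline:
            self.phase = candidate            # faster, so keep the adjustment
        # otherwise the previous timing stays in place


def toy_speed(phase: float) -> float:
    """Hypothetical stand-in for the speed sensor, fastest at a phase of 2.0 rad."""
    return math.cos(phase - 2.0)


if __name__ == "__main__":
    pump = TrialAndErrorPump()
    for _ in range(500):  # each call stands in for "a few pump cycles"
        pump.trial(toy_speed)
    print(f"learned phase {pump.phase:.3f} rad, toy speed {toy_speed(pump.phase):.3f}")
```

Because each trial re-measures the current timing before testing a new one, every comparison is made under current conditions rather than against a remembered best, which is what lets a rule like this keep adapting if the environment changes.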

As the research team had hoped, the individual robots’ pushing and pulling propagated along the chain, and before long the entire train had reached its top speed without any explicit communication.

“If you allow two or more robots to push and pull each other in this way,” the press release explains, “the train will move in a single direction sooner or later. Consequently, the robots learn that this is the better setting for their pump without the need to communicate and without precise programming on how to move forwards. The system slowly optimizes itself.”
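To see how carts that never exchange messages can still settle on a common rhythm, here is a toy simulation in the same hedged spirit. It assumes, purely for illustration, that the train’s speed depends only on the timing offset between neighbouring carts and is highest when each neighbouring pair is offset by a quarter cycle; `Cart`, `train_speed` and `run_train` are hypothetical names, and the physics is invented. Because the carts are physically linked, every cart measures the same train speed, so all of them reach the same keep-or-revert verdict without communicating.

```python
import math
import random
from typing import List

TWO_PI = 2 * math.pi


class Cart:
    """One cart of a toy train (hypothetical). It never messages other carts."""

    def __init__(self, seed: int, step: float = 0.3):
        self.rng = random.Random(seed)
        self.phase = self.rng.uniform(0.0, TWO_PI)  # random initial pump timing
        self.step = step                            # largest tweak per trial (assumed)
        self.candidate = self.phase

    def propose(self) -> float:
        """Pick a slightly different pump timing to try for the next few cycles."""
        self.candidate = (self.phase + self.rng.uniform(-self.step, self.step)) % TWO_PI
        return self.candidate

    def decide(self, speed_before: float, speed_after: float) -> None:
        """Keep the new timing only if the measured train speed went up."""
        if speed_after > speed_before:
            self.phase = self.candidate


def train_speed(phases: List[float]) -> float:
    """Hypothetical physics: neighbours offset by a quarter cycle push the train
    forward at full speed; other offsets help less, stall it, or drive it backward."""
    total = sum(math.sin(b - a) for a, b in zip(phases, phases[1:]))
    return total / (len(phases) - 1)


def run_train(n_carts: int = 4, trials: int = 2000, seed: int = 0) -> List[Cart]:
    carts = [Cart(seed=1000 * seed + i) for i in range(n_carts)]
    for _ in range(trials):
        before = train_speed([c.phase for c in carts])
        # Every cart tries its own tweak during the same few cycles...
        after = train_speed([c.propose() for c in carts])
        # ...and each judges it using only the shared speed its sensor reports.
        for c in carts:
            c.decide(before, after)
    return carts


if __name__ == "__main__":
    carts = run_train()
    print(f"toy train speed: {train_speed([c.phase for c in carts]):.3f} (max 1.0)")
```

In this toy model the speed cannot exceed 1.0, which is reached only when every neighbouring pair is offset by a quarter cycle; a larger `step` explores faster but tunes less finely.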

As a further test of the system’s ability to adapt to unexpected circumstances, the team sabotaged one of the robots in the chain by removing the needle that allows it to control the release of air. And according to Overvelde, what they found was unexpected.

“We thought that this robot would not contribute anymore to the behavior of the whole system,” Overvelde told The Debrief. “However, what we saw is that this robot still had an effect (we think the friction of the robot depended on when the pump was on). So apparently, the whole robot [train] was still able to exploit this. This was a nice, yet unexpected demonstration of the power of our algorithm.”

Outlook: These Robots Can Evolve?

Following these successful tests, Overvelde told The Debrief that he, Van Laake, and third team member Giorgio Oliveri would likely apply their algorithm to more robotic systems, including some inspired by biological motion such as that of an octopus or a starfish.

“We are now building robots that can deform on a surface, so in 2D. We are also building soft robots where each leg of a starfish-like robot will have their own brain (run the algorithm).”

Of course, Overvelde cautioned that even though his team’s robots use this simple form of interaction to find the best way to work together, seemingly similar systems in nature may have acquired this ability over many generations of evolutionary trial and error. But, he notes, his individual-yet-collective system may be reaching optimal results over time in much the same way.

“What you likely see in many natural collective systems [like bees or ants] is that they exhibit emergent behavior, and evolution has provided the necessary adaptation to the rules to make the emergent behavior robust. In our system, we have not used evolution but use this ‘trial-and-error’ approach. So in a way, our robot is evolving as it is operating.”

As for the main takeaway from building these simple self-learning robots that cooperate so efficiently, and how they might be put to use, Overvelde told The Debrief he sees their fundamental simplicity as the most enduring benefit, and one he hopes colleagues in this growing field can build on.

“Important here is that we realize that the robot and how it moves might not be very impressive, but our contribution is really about the algorithm (simple rules) that we implemented. I am curious if other roboticists will be able to exploit our algorithm in a more complex system.”

Follow and connect with author Christopher Plain on Twitter: @plain_fiction
