A new study from the Columbia University School of Engineering and Applied Science has shown that a robot can anticipate another robot’s actions based solely on visual cues. This suggests that robots may one day be able to use a very basic form of empathy to make predictions.

Drawing on a cognitive framework called “Theory of Mind,” the scientists believe they have taken one of the very first steps toward artificial intelligence that can anticipate actions purely through visual observation.

“Autonomous robots are making incredible strides, from driverless cars to self-guided drones. All of us should care about robots: they’re going to worm their way into every aspect of our lives,” Dr. Hod Lipson, a mechanical engineering professor and co-author of the study, told The Debrief. “But there’s a catch.”

Robots are still “socially awkward” and have a very long way to go, but this study suggests that one day they may be able to anticipate what humans want.

BACKGROUND: Can a Robot Have Empathy?

AI systems have been predicting human needs for some time, but they rely on users’ past actions to anticipate behavior, and they are often pretty bad at it.

Columbia Engineering’s Creative Machines Lab, led by Mechanical Engineering Professor Hod Lipson, is attempting to give a robotic system the ability to make predictions from visual cues. Using human and primate social interaction as a model, the team seeks to figure out how to confer a similar cognitive ability on robots.

Humans who know each other have a knack for guessing what the other person is going to do next. People who live or work closely together quickly learn to predict each other’s actions and even thoughts. This ability is a critical evolutionary tool that has helped keep us alive since our earliest days.

ANALYSIS: How Do You Prove a Robot Can Be Empathetic?

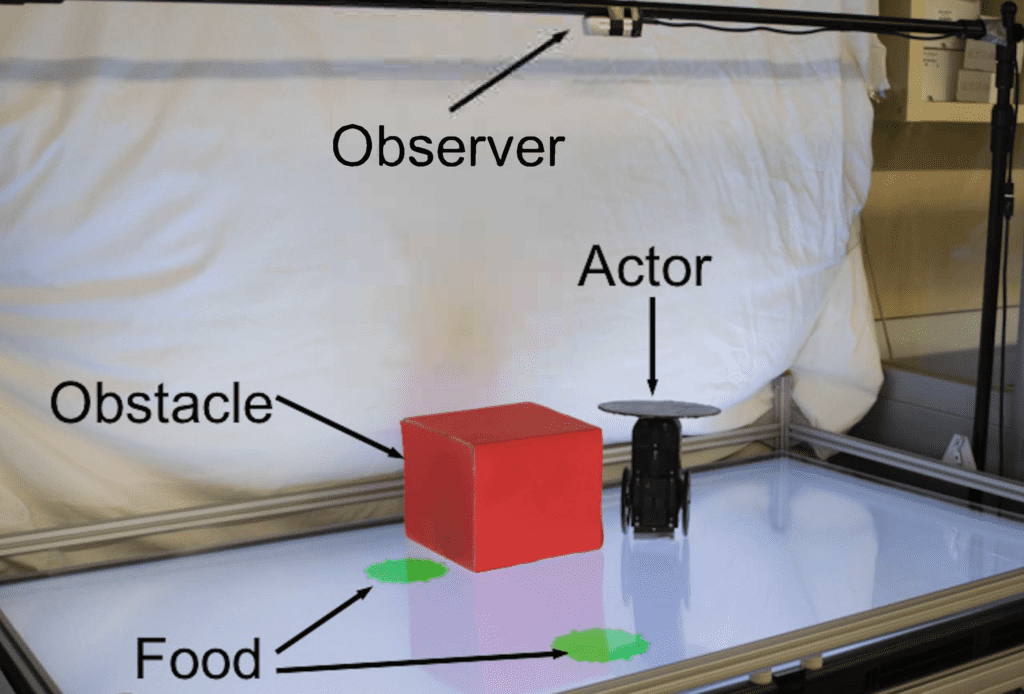

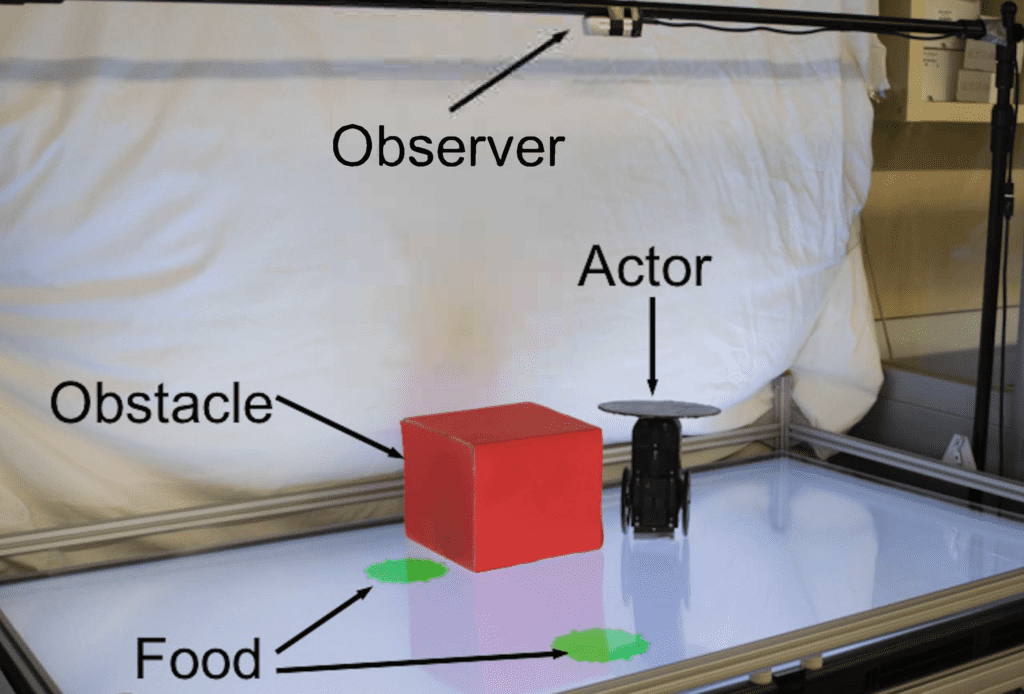

The experiment took place in a small playpen where a robot was programmed to seek out green circles and move toward them. The circles were placed so that sometimes the robot could see them with its camera, while at other times a red box blocked its view. In those cases, the robot would either seek out another green circle or not move at all. The team then built an observer robot, which simply watched the seeker robot move around and find green circles. After two hours of observation, the observer robot could anticipate which green circle the seeker would travel to and the exact route it would take to get there.

Eventually, the observer robot correctly predicted the seeker’s target circle and route 98 out of 100 times, an alarming level of accuracy. As the team altered the setup by moving the circles and obstacles, the observer continued to make near-perfect predictions. Eerily, the observer robot was never “told” that the red box blocked the seeker’s view; it figured that out on its own.

“Our findings begin to demonstrate how robots can see the world from another robot’s perspective. The ability of the observer to put itself in its partner’s shoes, so to speak, and understand, without being guided, whether its partner could or could not see the green circle from its vantage point, is perhaps a primitive form of empathy,” explained Boyuan Chen, the study’s lead author, in a statement.

The research team acknowledges that the observer robot’s predictive ability is still elementary. Still, its ability to accurately predict future moves from only a few seconds of visual observation exceeded the team’s expectations.

Toddlers and preschool children develop the ability to make similar predictions through visual observation. They come to realize that others may have different needs and goals, and they can often work out what those goals are from little more than simple visual or verbal cues. This cognitive framework is known as Theory of Mind.

As we grow older, this ability gives rise to sympathy and empathy, and to our uncanny knack for knowing what someone else is thinking from a single look. It also lets us cooperate on large, challenging shared goals, but it equally allows us to deceive and manipulate one another.

OUTLOOK: Robot Empathy Will Bring About A Strange Future

The study suggests that robots may be at the beginning of a major transformation, but their predictive ability is still far from ‘human.’

If this research advances and robots become adept at making predictions from visual cues, they could become far more useful tools. Robots that can observe one another’s performance and correct it in real time could be incredibly efficient. The ethical questions such abilities raise, however, will require serious consideration.

“Robots have a hard time understanding and predicting what other intelligent agents around them, both humans and other robots, are going to do. In other words, robots are somewhat socially awkward. That’s why your robotic vacuum cleaner doesn’t get out of the way when you’re walking down the hall, or doesn’t make extra effort to clean a spot you seem to care about. Once robots understand what others around them are doing and planning, they can cooperate better,” Lipson told The Debrief.

That said, such a robot could, in principle, also guess what a human is thinking from a simple glance and then use manipulation or deception to achieve its goal.

Is it possible to be ‘gaslighted’ by a robot?