A new United Nations report suggests that a long-anticipated and highly concerning debut in military technology took place last year during Libya’s civil war: the use of an AI weapon. The U.N. report described an aerial drone used by Libya’s government against militia forces as one of the “lethal autonomous weapons systems” that were “programmed to attack targets without requiring data connectivity between the operator and the munition: in effect, a true ‘fire, forget and find’ capability.”

The U.N. report describes what appears to be an AI weapon attacking humans, a stunning precedent in warfare that should inspire fervent debate.

Background: Autonomous AI Drones

Drone warfare is not new. Aerial drones have been in vogue for almost two decades, a staple of U.S. foreign policy through the Bush, Obama, and Trump administrations. Increasingly, foreign powers, as well as non-state actors, are developing and deploying aerial drones of their own.

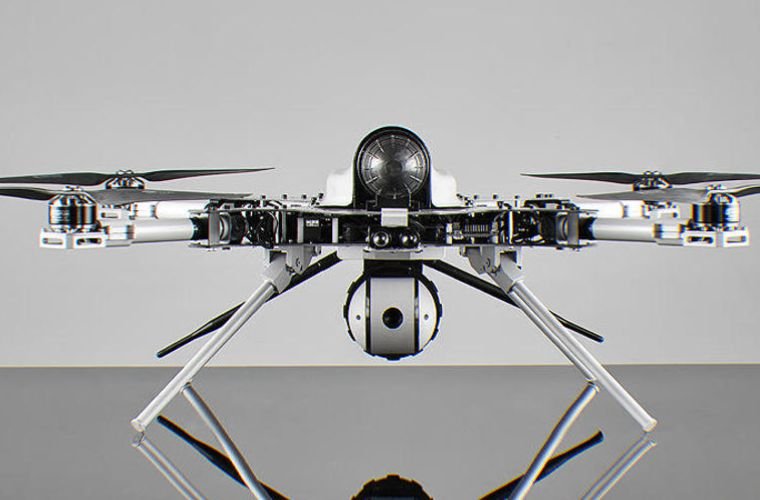

STM, a Turkish company that designs military technology (among other things), created the KARGU-2 drone for the Turkish Armed Forces. Turkey has also exported the KARGU-2 to its allies, including the Libyan government, which used the drone last year in its conflict against militia forces.

While retreating, the militia fighters “were subject to continual harassment” from the KARGU-2; they “were hunted down and remotely engaged,” the U.N. report said. An aerial drone harassing and hunting enemy combatants is not, by itself, significant. What is significant is that the KARGU-2 has an “autonomous” mode.

The KARGU is a rotary-wing attack drone designed for asymmetric warfare and anti-terrorist operations. According to the STM website, the “KARGU can be effectively used against static or moving targets through its indigenous and real-time image processing capabilities and machine learning algorithms embedded on the platform.”

Real-time image processing driven by machine learning algorithms amounts, in practice, to artificial intelligence. And the use of artificial intelligence in warfare raises pressing ethical and legal questions.

Analysis: Was a Truly Autonomous Weapon Used?

The U.N. report left some questions unanswered. Clearly, a drone was used in the attack. “What’s not clear is whether the drone was allowed to select its target autonomously and whether the drone, while acting autonomously, harmed anyone. The U.N. report heavily implies, but does not state, that it did,” Zachary Kallenborn, an expert on drone warfare at the University of Maryland, wrote in the Bulletin of the Atomic Scientists.

“If anyone was killed in an autonomous attack, it would likely represent an historic first known case of artificial-intelligence-based autonomous weapons being used to kill,” Mr. Kallenborn wrote.

At present, no binding international treaty specifically governs the development of autonomous weapons. If the KARGU-2 did autonomously hunt and kill militia forces last year, then AI military technology is already in use without any framework of regulatory oversight. James Dawes, a professor at Macalester College, told the New York Times last week that this would be a “catastrophic” development.

“AI drone development is adding to global alarm,” Jamie Dettmer writes in Voice of America. Human rights groups are particularly concerned about the ongoing development of AI-driven weapons. “Human control and judgment in life-and-death decisions is eroding, potentially to an unacceptable point,” Mary Wareham, the arms advocacy director at Human Rights Watch, wrote to the New York Times. Countries, she wrote, “must act in the interest of humanity by negotiating a new international treaty to ban fully autonomous weapons and retain meaningful human control over the use of force.”

Outlook: Now What?

The race to develop the leading autonomous AI weapon is on. If a middle power like Turkey can build a sophisticated autonomous drone, what can China, Israel, or the U.S. come up with? Perhaps the better question is: what have they already come up with?

Whether or not the KARGU-2 was used autonomously last year, AI weaponry is poised to become a centerpiece of the 21st-century battlefield. In all likelihood, the international community will have to craft policy retroactively for weapon systems that are already being deployed and, perhaps more frighteningly, for systems that make their own decisions.

Follow and connect with author Harrison Kass on Twitter: @harrison_kass

Don’t forget to follow us on Twitter, Facebook, and Instagram to weigh in and share your thoughts. You can also get all the latest news and exciting feature content from The Debrief on Flipboard and Pinterest. And subscribe to The Debrief YouTube Channel to check out all of The Debrief’s exciting original shows: DEBRIEFED: Digging Deeper with Cristina Gomez, and Rebelliously Curious with Chrissy Newton.