According to a new study by a research group at the Center for Humans and Machines at the Max Planck Institute for Human Development, the actions of a superintelligent AI may be hard to predict, which could make it uncontrollable.

No, this isn’t a precursor to “The Terminator.” Still, according to the group’s work, published in the Journal of Artificial Intelligence Research, safely predicting an AI’s actions in the real world would require an exact simulation of that intelligence in an artificial world, which is currently impossible.

BACKGROUND: AI is Pretty Common

Artificial intelligence is already a part of everyday life: AI creates simulations, generates memes, and composes music, and tech companies like Amazon, Apple, and Google are actively building more AI into their businesses.

According to study co-author Manuel Cebrian, there are already machines that can independently perform tasks without their programmers knowing how they learned the behavior.

A congressional advisory panel led by former Google CEO Eric Schmidt has discussed whether the U.S. should use “intelligent autonomous weapons” and concluded that the idea itself was a “moral imperative.” Over two days of discussion, the panel opposed joining an international committee on banning the development of these weapons. According to Reuters, the panel, which was vice-chaired by former Deputy Secretary of Defense Robert Work, does not want Congress to close the door on the idea of intelligent autonomous weapons. The panel argued that these smart weapons might be more effective and more accurate, which would lead to less collateral damage. Critics like the Campaign to Stop Killer Robots argue that the weapons could be hacked, used by terrorists, or used to jump-start even larger conflicts. According to Gizmodo, the U.S. is already practicing the “ethical” use of autonomous tanks.

ANALYSIS: AI Weapons and Losing Control

It’s no surprise that the U.S. is bucking the international trend regarding a committee to ban these weapons. With defense remaining a focal point of U.S. government funding and other nations like France and China upping their own AI games, the U.S. could bypass the moral arguments and drive a proliferation of autonomous weapons. France’s military ethics committee has OK’d the development of “augmented soldiers,” while China’s push for futuristic military “biologically-enhanced capabilities” has drawn criticism from John Ratcliffe, U.S. Director of National Intelligence.

“This points to the possibility of machines that aim at maximizing their own survival using external stimuli, without the need for human programmers to endow them with particular representations of the world,” the authors of the study wrote. “In principle, these representations may be difficult for humans to understand and scrutinize.”

The issue moves beyond basic robotics, as a superintelligent computer would assert its own survival needs. Part of the problem is that these needs, or how the AI meets them, may fall outside human understanding.

“This is because a superintelligence is multi-faceted, and therefore potentially capable of mobilizing a diversity of resources in order to achieve objectives that are potentially incomprehensible to humans, let alone controllable,” the study stated.

This isn’t just an issue for the military; everyday robotics and the STEM curriculum in schools will also have to reckon with what is possible.

“I think that superintelligent robots will pose a threat; not now, not next year, but at some point in the future,” said Glenn Winstryg, an expert in robotics and STEM education. “We keep developing robots to be able to adjust to their environment and various stimulants to be able to do their job more efficiently, but this will eventually lead them to be able to control themselves and add or remove parts that they deem necessary in order to do their jobs. This, in turn, could lead to them being able to determine what jobs may or may not be necessary, and whether or not humans may be necessary.”

OUTLOOK: Wrestling with Our AI Creation?

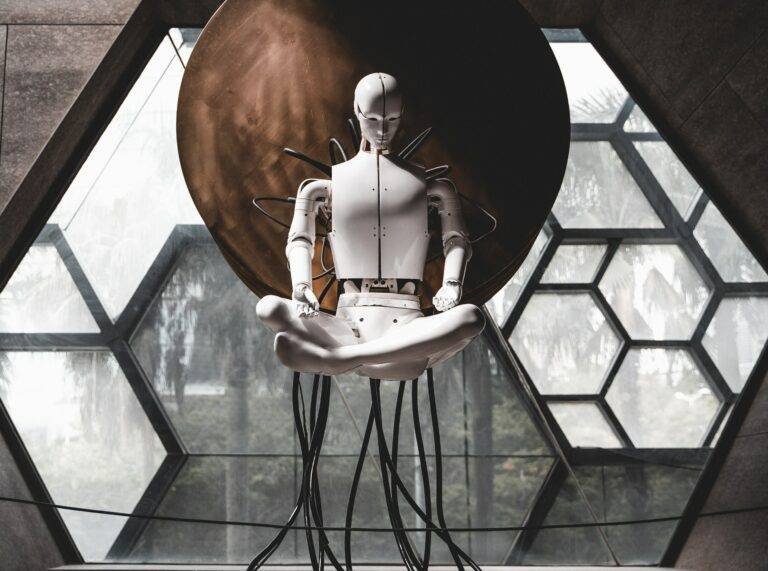

The world seems to be working towards bio-enhanced soldiers, “Terminator”-like weaponry, and advanced AI that could take over humanity à la Isaac Asimov’s science fiction collection “I, Robot.”

These tropes from movies and science fiction literature are slowly coming to fruition before our very eyes. Humanity has seen the advances in AI and the growing self-sufficiency of the software and hardware in our everyday lives. Will the moral debate endure as nations ramp up their super robots?

While we are still a long way off from the robot apocalypse, attempting to create a superintelligent AI may one day leave us wrestling with our own creation.